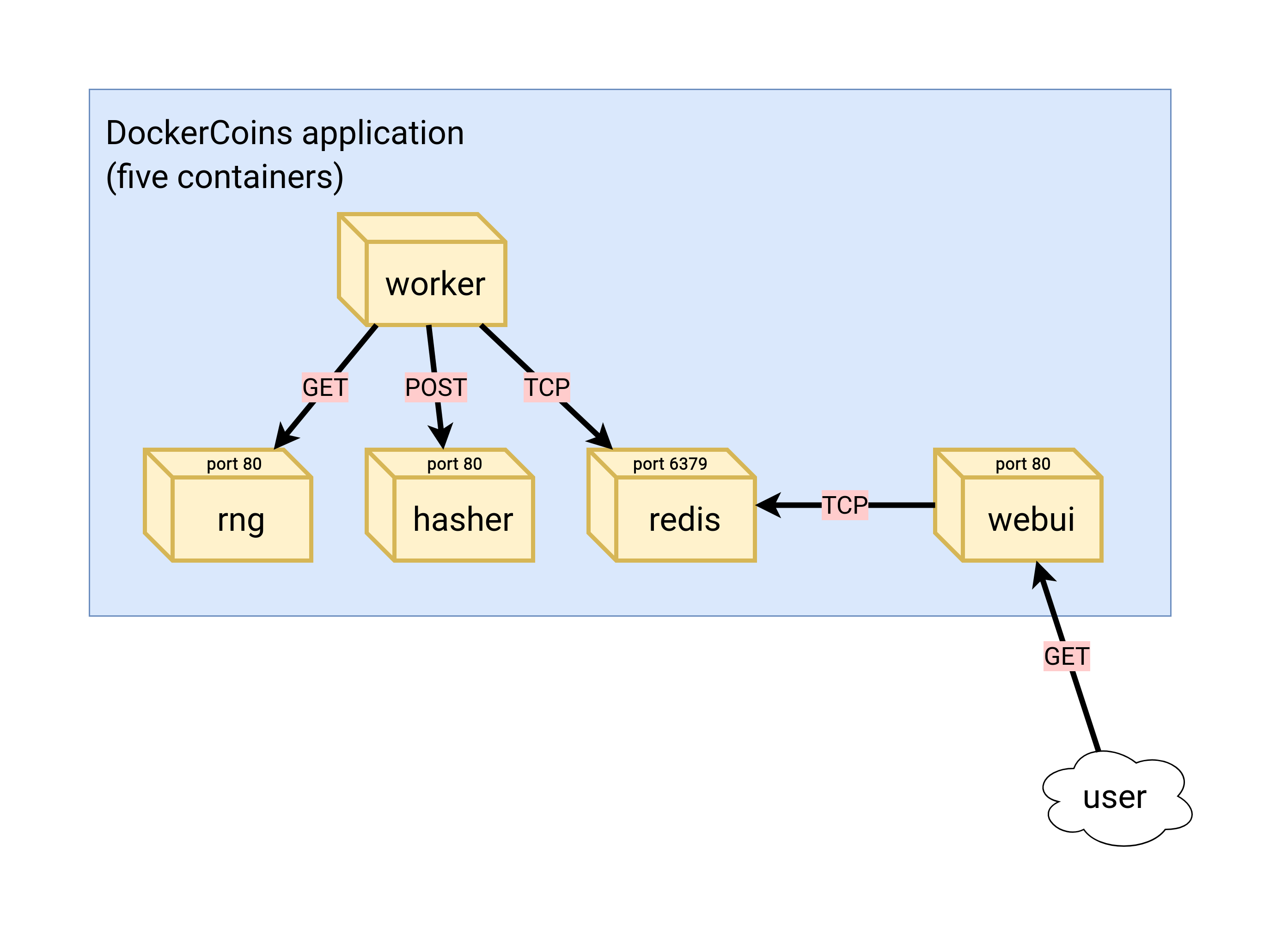

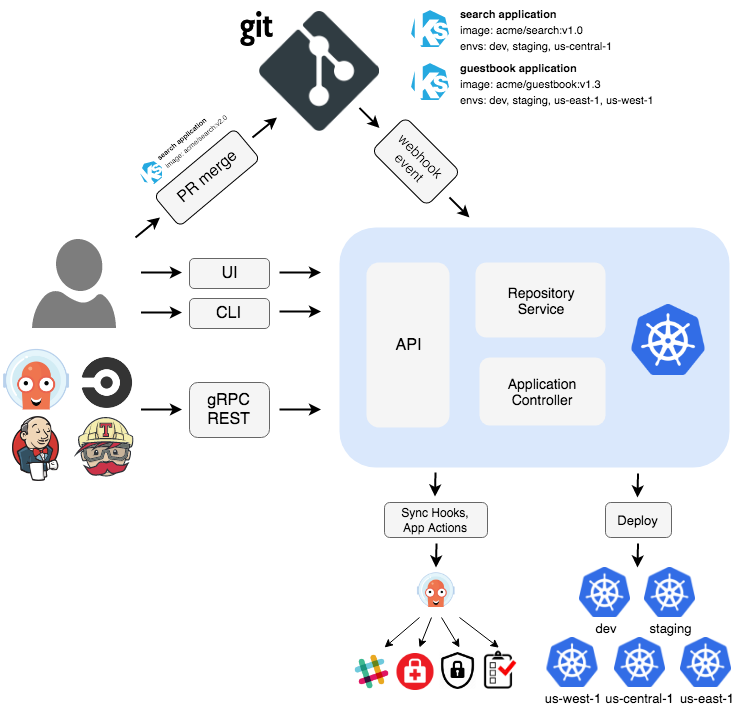

class: title, self-paced Application packaging<br/>for Kubernetes<br/> .nav[*Self-paced version*] .debug[ These slides have been built from commit: 5f55313 [shared/title.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/title.md)] --- class: title, in-person Application packaging<br/>for Kubernetes<br/><br/><br/> .footnote[ **Slides[:](https://www.youtube.com/watch?v=h16zyxiwDLY) https://2025-01-enix.container.training/** ] .debug[[shared/title.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/title.md)] --- ## Introductions - Hello! - On stage: Jérôme ([@jpetazzo@hachyderm.io]) - Backstage: Alexandre, Antoine, Aurélien (x2), Benjamin, David, Kostas, Nicolas, Paul, Sébastien, Thibault... - Schedule: every day from 9:00 to 13:00 - We'll take a break around 11:00 - Feel free to ask as many questions as you like! - Use [Mattermost](https://training.enix.io/mattermost) for questions, asking for help, etc. [@alexbuisine]: https://twitter.com/alexbuisine [EphemeraSearch]: https://ephemerasearch.com/ [@jpetazzo]: https://twitter.com/jpetazzo [@jpetazzo@hachyderm.io]: https://hachyderm.io/@jpetazzo [@s0ulshake]: https://twitter.com/s0ulshake [Quantgene]: https://www.quantgene.com/ .debug[[logistics.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/logistics.md)] --- ## The morning 15 minutes - Every day, we'll start at 9:00 with a 15-minute mini-presentation (on a topic chosen together, not necessarily related to the training!) 
- A chance to warm up our neurons with 🥐/☕️/🍊 (before getting down to serious business) - Then at 9:15 we get to the heart of the matter .debug[[logistics.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/logistics.md)] --- ## Hands-on exercises - At the end of each morning, there is a concrete hands-on exercise (to put into practice what we've seen) - The exercises are part of the training! - They are designed to take between 15 minutes and 2 hours (depending on each person's knowledge and comfort level) - Each morning will start with a review of the previous day's exercise - We are here to help if you get stuck on an exercise! .debug[[logistics.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/logistics.md)] --- ## Allô Docker¹ ? - Every afternoon: one hour of open Q&A! (except on the last day) - Thursday: 16:00-17:00 - Friday: 15:00-16:00 - Monday: 15:30-16:30 - On [Jitsi][jitsi] ("visioconf" link on the training portal) .footnote[¹A nod to the excellent ["Quoi de neuf Docker?"][qdnd] by the excellent [Nicolas Deloof][ndeloof] 🙂] [qdnd]: https://www.youtube.com/channel/UCOAhkxpryr_BKybt9wIw-NQ [ndeloof]: https://github.com/ndeloof [jitsi]: https://training.enix.io/jitsi-magic/jitsi.container.training/Janvier2025 .debug[[logistics.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/logistics.md)] --- ## A brief introduction - This was initially written by [Jérôme Petazzoni](https://twitter.com/jpetazzo) to support in-person, instructor-led workshops and tutorials - Credit is also due to [multiple contributors](https://github.com/jpetazzo/container.training/graphs/contributors): thank you! - You can also follow along on your own, at your own pace - We included as much information as possible in these slides - We recommend having a mentor to help you ... - ... 
Or be comfortable spending some time reading the Kubernetes [documentation](https://kubernetes.io/docs/) ... - ... And looking for answers on [StackOverflow](http://stackoverflow.com/questions/tagged/kubernetes) and other outlets .debug[[k8s/intro.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/intro.md)] --- class: self-paced ## Hands on, you shall practice - Nobody ever became a Jedi by spending their lives reading Wookiepedia - Likewise, it will take more than merely *reading* these slides to make you an expert - These slides include *tons* of demos, exercises, and examples - They assume that you have access to a Kubernetes cluster - If you are attending a workshop or tutorial: <br/>you will be given specific instructions to access your cluster - If you are doing this on your own: <br/>the first chapter will give you various options to get your own cluster .debug[[k8s/intro.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/intro.md)] --- ## Accessing these slides now - We recommend that you open these slides in your browser: https://2025-01-enix.container.training/ - This is a public URL, you're welcome to share it with others! - Use arrows to move to next/previous slide (up, down, left, right, page up, page down) - Type a slide number + ENTER to go to that slide - The slide number is also visible in the URL bar (e.g. .../#123 for slide 123) .debug[[shared/about-slides.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/about-slides.md)] --- ## These slides are open source - The sources of these slides are available in a public GitHub repository: https://github.com/jpetazzo/container.training - These slides are written in Markdown - You are welcome to share, re-use, re-mix these slides - Typos? Mistakes? Questions? Feel free to hover over the bottom of the slide ... .footnote[👇 Try it! The source file will be shown and you can view it on GitHub and fork and edit it.] 
<!-- .lab[ ```open https://github.com/jpetazzo/container.training/tree/master/slides/common/about-slides.md``` ] --> .debug[[shared/about-slides.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/about-slides.md)] --- ## Accessing these slides later - Slides will remain online so you can review them later if needed (let's say we'll keep them online at least 1 year, how about that?) - You can download the slides using this URL: https://2025-01-enix.container.training/slides.zip (then open the file `3.yml.html`) - You can also generate a PDF of the slides (by printing them to a file; but be patient with your browser!) .debug[[shared/about-slides.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/about-slides.md)] --- ## These slides are constantly updated - Feel free to check the GitHub repository for updates: https://github.com/jpetazzo/container.training - Look for branches named YYYY-MM-... - You can also find specific decks and other resources on: https://container.training/ .debug[[shared/about-slides.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/about-slides.md)] --- class: extra-details ## Extra details - This slide has a little magnifying glass in the top left corner - This magnifying glass indicates slides that provide extra details - Feel free to skip them if: - you are in a hurry - you are new to this and want to avoid cognitive overload - you want only the most essential information - You can review these slides another time if you want, they'll be waiting for you ☺ .debug[[shared/about-slides.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/about-slides.md)] --- ## Pre-requirements - Kubernetes concepts (pods, deployments, services, labels, selectors) - Hands-on experience working with containers (building images, running them; doesn't matter how exactly) - Familiarity with the UNIX command-line (navigating directories, 
editing files, using `kubectl`) .debug[[k8s/prereqs-advanced.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/prereqs-advanced.md)] --- class: title *Tell me and I forget.* <br/> *Teach me and I remember.* <br/> *Involve me and I learn.* Misattributed to Benjamin Franklin [(Probably inspired by Chinese Confucian philosopher Xunzi)](https://www.barrypopik.com/index.php/new_york_city/entry/tell_me_and_i_forget_teach_me_and_i_may_remember_involve_me_and_i_will_lear/) .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- ## Hands-on sections - There will be *a lot* of examples and demos - We are going to build, ship, and run containers (and sometimes, clusters!) - If you want, you can run all the examples and demos in your environment (but you don't have to; it's up to you!) - All hands-on sections are clearly identified, like the gray rectangle below .lab[ - This is a command that we're gonna run: ```bash echo hello world ``` ] .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- class: in-person ## Where are we going to run our containers? .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- class: in-person, pic  .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- ## If you're attending a live training or workshop - Each person gets a private lab environment (depending on the scenario, this will be one VM, one cluster, multiple clusters...) 
- The instructor will tell you how to connect to your environment - Your lab environments will be available for the duration of the workshop (check with your instructor to know exactly when they'll be shut down) .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- ## Running your own lab environments - If you are following a self-paced course... - Or watching a replay of a recorded course... - ...You will need to set up a local environment for the labs - If you want to deliver your own training or workshop: - deployment scripts are available in the [prepare-labs] directory - you can use them to automatically deploy many lab environments - they support many different infrastructure providers [prepare-labs]: https://github.com/jpetazzo/container.training/tree/main/prepare-labs .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- class: in-person ## Why don't we run containers locally? - Installing this stuff can be hard on some machines (32-bit CPU or OS... Laptops without administrator access... etc.) - *"The whole team downloaded all these container images from the WiFi! <br/>... and it went great!"* (Literally no-one ever) - All you need is a computer (or even a phone or tablet!), with: - an Internet connection - a web browser - an SSH client .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- class: in-person ## SSH clients - On Linux, OS X, FreeBSD... 
you are probably all set - On Windows, get one of these: - [putty](http://www.putty.org/) - Microsoft [Win32 OpenSSH](https://github.com/PowerShell/Win32-OpenSSH/wiki/Install-Win32-OpenSSH) - [Git BASH](https://git-for-windows.github.io/) - [MobaXterm](http://mobaxterm.mobatek.net/) - On Android, [JuiceSSH](https://juicessh.com/) ([Play Store](https://play.google.com/store/apps/details?id=com.sonelli.juicessh)) works pretty well - Nice-to-have: [Mosh](https://mosh.org/) instead of SSH, if your Internet connection tends to lose packets .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- class: in-person, extra-details ## What is this Mosh thing? *You don't have to use Mosh or even know about it to follow along. <br/> We're just telling you about it because some of us think it's cool!* - Mosh is "the mobile shell" - It is essentially SSH over UDP, with roaming features - It retransmits packets quickly, so it works great even on lossy connections (Like hotel or conference WiFi) - It has intelligent local echo, so it works great even in high-latency connections (Like hotel or conference WiFi) - It supports transparent roaming when your client IP address changes (Like when you hop from hotel to conference WiFi) .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- class: in-person, extra-details ## Using Mosh - To install it: `(apt|yum|brew) install mosh` - It has been pre-installed on the VMs that we are using - To connect to a remote machine: `mosh user@host` (It is going to establish an SSH connection, then hand off to UDP) - It requires UDP ports to be open (By default, it uses a UDP port between 60000 and 61000) .debug[[shared/handson.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/handson.md)] --- ## WebSSH - The virtual machines are also accessible via WebSSH - This can be useful if: - you 
can't install an SSH client on your machine - SSH connections are blocked (by firewall or local policy) - To use WebSSH, connect to the IP address of the remote VM on port 1080 (each machine runs a WebSSH server) - Then provide the login and password indicated on your card .debug[[shared/webssh.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/webssh.md)] --- ## Good to know - WebSSH uses WebSocket - If you're having connection issues, try disabling your HTTP proxy (many HTTP proxies can't handle WebSocket properly) - Most keyboard shortcuts should work, except Ctrl-W (as it is hardwired by the browser to "close this tab") .debug[[shared/webssh.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/webssh.md)] --- class: in-person ## Testing the connection to our lab environment .lab[ - Connect to your lab environment with your SSH client: ```bash ssh `user`@`A.B.C.D` ssh -p `32323` `user`@`A.B.C.D` ``` (Make sure to replace the highlighted values with the ones provided to you!) <!-- ```bash for N in $(awk '/\Wnode/{print $2}' /etc/hosts); do ssh -o StrictHostKeyChecking=no $N true done ``` ```bash ### FIXME find a way to reset the cluster, maybe? ``` --> ] You should see a prompt looking like this: ``` [A.B.C.D] (...) user@machine ~ $ ``` If anything goes wrong: ask for help! 
.debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- class: in-person ## `tailhist` - The shell history of the instructor is available online in real time - The instructor will provide you a "magic URL" (typically, the instructor's lab address on port 1088 or 30088) - Open that URL in your browser and you should see the history - The history is updated in real time (using a WebSocket connection) - It should be green when the WebSocket is connected (if it turns red, reloading the page should fix it) .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- ## Doing or re-doing the workshop on your own? - Use something like [Play-With-Docker](https://labs.play-with-docker.com/) or [Play-With-Kubernetes](https://training.play-with-kubernetes.com/) Zero setup effort; but environments are short-lived and might have limited resources - Create your own cluster (local or cloud VMs) Small setup effort; small cost; flexible environments - Create a bunch of clusters for you and your friends ([instructions](https://github.com/jpetazzo/container.training/tree/main/prepare-labs)) Bigger setup effort; ideal for group training .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- ## For a consistent Kubernetes experience ... - If you are using your own Kubernetes cluster, you can use [jpetazzo/shpod](https://github.com/jpetazzo/shpod) - `shpod` provides a shell running in a pod on your own cluster - It comes with many tools pre-installed (helm, stern...) 
- These tools are used in many demos and exercises in these slides - `shpod` also gives you completion and a fancy prompt - It can also be used as an SSH server if needed .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- class: self-paced ## Get your own Docker nodes - If you already have some Docker nodes: great! - If not: let's get some thanks to Play-With-Docker .lab[ - Go to https://labs.play-with-docker.com/ - Log in - Create your first node <!-- ```open https://labs.play-with-docker.com/``` --> ] You will need a Docker ID to use Play-With-Docker. (Creating a Docker ID is free.) .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- ## We don't need to connect to ALL the nodes - If your cluster has multiple nodes (e.g. `node1`, `node2`, ...): unless instructed, **all commands must be run from the first node** - We don't need to check out/copy code or manifests on other nodes - During normal operations, we do not need access to the other nodes (but we could log into these nodes to troubleshoot or examine stuff) .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- ## Terminals Once in a while, the instructions will say: <br/>"Open a new terminal." There are multiple ways to do this: - create a new window or tab on your machine, and SSH into the VM; - use screen or tmux on the VM and open a new window from there. You are welcome to use the method that you feel the most comfortable with. .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- ## Tmux cheat sheet (basic) [Tmux](https://en.wikipedia.org/wiki/Tmux) is a terminal multiplexer like `screen`. *You don't have to use it or even know about it to follow along. 
<br/> But some of us like to use it to switch between terminals. <br/> It has been preinstalled on your workshop nodes.* - You can start a new session with `tmux` <br/> (or resume or share an existing session with `tmux attach`) - Then use these keyboard shortcuts: - Ctrl-b c → creates a new window - Ctrl-b n → go to next window - Ctrl-b p → go to previous window - Ctrl-b " → split window top/bottom - Ctrl-b % → split window left/right - Ctrl-b arrows → navigate within split windows .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- ## Tmux cheat sheet (advanced) - Ctrl-b d → detach session <br/> (resume it later with `tmux attach`) - Ctrl-b Alt-1 → rearrange windows in columns - Ctrl-b Alt-2 → rearrange windows in rows - Ctrl-b , → rename window - Ctrl-b Ctrl-o → cycle pane position (e.g. switch top/bottom) - Ctrl-b PageUp → enter scrollback mode <br/> (use PageUp/PageDown to scroll; Ctrl-c or Enter to exit scrollback) .debug[[shared/connecting.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/connecting.md)] --- name: toc-part-1 ## Part 1 - [Our demo apps](#toc-our-demo-apps) - [Kustomize](#toc-kustomize) - [Managing stacks with Helm](#toc-managing-stacks-with-helm) - [Helm chart format](#toc-helm-chart-format) - [Creating a basic chart](#toc-creating-a-basic-chart) - [Exercise — Helm Charts](#toc-exercise--helm-charts) .debug[(auto-generated TOC)] --- name: toc-part-2 ## Part 2 - [Creating better Helm charts](#toc-creating-better-helm-charts) - [Charts using other charts](#toc-charts-using-other-charts) - [Helm and invalid values](#toc-helm-and-invalid-values) - [Helm secrets](#toc-helm-secrets) - [Exercise — Umbrella Charts](#toc-exercise--umbrella-charts) .debug[(auto-generated TOC)] --- name: toc-part-3 ## Part 3 - [Managing our stack with `helmfile`](#toc-managing-our-stack-with-helmfile) - [YTT](#toc-ytt) - [Git-based workflows 
(GitOps)](#toc-git-based-workflows-gitops) - [FluxCD](#toc-fluxcd) - [ArgoCD](#toc-argocd) .debug[(auto-generated TOC)] .debug[[shared/toc.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/toc.md)] --- class: pic .interstitial[] --- name: toc-our-demo-apps class: title Our demo apps .nav[ [Previous part](#toc-) | [Back to table of contents](#toc-part-1) | [Next part](#toc-kustomize) ] .debug[(automatically generated title slide)] --- # Our demo apps - We are going to use a few demo apps for demos and labs - Let's get acquainted with them before we dive in! .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## The `color` app - Image name: `jpetazzo/color`, `ghcr.io/jpetazzo/color` - Available for linux/amd64, linux/arm64, linux/arm/v7 platforms - HTTP server listening on port 80 - Serves a web page with a single line of text - The background of the page is derived from the hostname (e.g. 
if the hostname is `blue-xyz-123`, the background is `blue`) - The web page is "curl-friendly" (it contains `\r` characters to hide HTML tags and declutter the output) .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## The `color` app in action - Create a Deployment called `blue` using image `jpetazzo/color` - Expose that Deployment with a Service - Connect to the Service with a web browser - Connect to the Service with `curl` .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## Dockercoins - App with 5 microservices: - `worker` (runs an infinite loop connecting to the other services) - `rng` (web service; generates random numbers) - `hasher` (web service; computes SHA sums) - `redis` (holds a single counter incremented by the `worker` at each loop) - `webui` (web app; displays a graph showing the rate of increase of the counter) - Uses a mix of Node, Python, Ruby - Very simple components (approx. 50 lines of code for the most complicated one) .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- class: pic  .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## Deploying Dockercoins - Pre-built images available as `dockercoins/<component>:v0.1` (e.g. `dockercoins/worker:v0.1`) - Containers "discover" each other through DNS (e.g. worker connects to `http://hasher/`) - A Kubernetes YAML manifest is available in *the* repo .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## The repository - When we refer to "the" repository, it means: https://github.com/jpetazzo/container.training - It hosts slides, demo apps, deployment scripts... - All the sample commands, labs, etc. 
will assume that it's available in: `~/container.training` - Let's clone the repo in our environment! .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## Cloning the repo .lab[ - There is a convenient shortcut to clone the repository: ```bash git clone https://container.training ``` ] While the repository clones, fork it, star it ~~subscribe and hit the bell!~~ .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## Running Dockercoins - All the Kubernetes manifests are in the `k8s` subdirectory - This directory has a `dockercoins.yaml` manifest .lab[ - Deploy Dockercoins: ```bash kubectl apply -f ~/container.training/k8s/dockercoins.yaml ``` ] - The `webui` is exposed with a `NodePort` service - Connect to it (through the `NodePort` or `port-forward`) - Note, it might take a minute for the worker to start .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## Details - If the `worker` Deployment is scaled up, the graph should go up - The `rng` Service is meant to be a bottleneck (capping the graph to 10/second until `rng` is scaled up) - There is artificial latency in the different services (so that the app doesn't consume CPU/RAM/network) .debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- ## More colors - The repository also contains a `rainbow.yaml` manifest - It creates three namespaces (`blue`, `green`, `red`) - In each namespace, there is an instance of the `color` app (we can use that later to do *literal* blue-green deployment!) 
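
Since each instance of the `color` app derives its background from its hostname, the Pods in the `blue`, `green`, and `red` namespaces should each serve a different color. Here is a minimal sketch of that mapping, assuming the color is simply the part of the hostname before the first dash. The `color_from_hostname` helper and the Pod name suffixes are hypothetical, for illustration only; the actual logic in `jpetazzo/color` may differ.

```bash
# Hypothetical helper (not part of the color app): guess the background
# color from a hostname by keeping everything before the first dash.
color_from_hostname() {
  local name="$1"
  echo "${name%%-*}"
}

# Pod names in the three namespaces might look something like these
# (the suffixes are made up for illustration).
for pod in blue-xyz-123 green-abc-456 red-def-789; do
  echo "$pod -> $(color_from_hostname "$pod")"
done
```

A hostname without any dash is returned unchanged, so a Pod simply named `red` would still map to `red`.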
.debug[[k8s/demo-apps.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/demo-apps.md)] --- class: pic .interstitial[] --- name: toc-kustomize class: title Kustomize .nav[ [Previous part](#toc-our-demo-apps) | [Back to table of contents](#toc-part-1) | [Next part](#toc-managing-stacks-with-helm) ] .debug[(automatically generated title slide)] --- # Kustomize - Kustomize lets us transform Kubernetes resources: *YAML + kustomize → new YAML* - Starting point = valid resource files (i.e. something that we could load with `kubectl apply -f`) - Recipe = a *kustomization* file (describing how to transform the resources) - Result = new resource files (that we can load with `kubectl apply -f`) .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Pros and cons - Relatively easy to get started (just get some existing YAML files) - Easy to leverage existing "upstream" YAML files (or other *kustomizations*) - Somewhat integrated with `kubectl` (but only "somewhat" because of version discrepancies) - Less complex than e.g. Helm, but also less powerful - No central index like the Artifact Hub (but is there a need for it?) .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Kustomize in a nutshell - Get some valid YAML (our "resources") - Write a *kustomization* (technically, a file named `kustomization.yaml`) - reference our resources - reference other kustomizations - add some *patches* - ... 
- Use that kustomization either with `kustomize build` or `kubectl apply -k` - Write new kustomizations referencing the first one to handle minor differences .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## A simple kustomization This features a Deployment, Service, and Ingress (in separate files), and a couple of patches (to change the number of replicas and the hostname used in the Ingress). ```yaml apiVersion: kustomize.config.k8s.io/v1beta1 kind: Kustomization patchesStrategicMerge: - scale-deployment.yaml - ingress-hostname.yaml resources: - deployment.yaml - service.yaml - ingress.yaml ``` On the next slide, let's see a more complex example ... .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## A more complex Kustomization .small[ ```yaml apiVersion: kustomize.config.k8s.io/v1beta1 kind: Kustomization commonAnnotations: mood: 😎 commonLabels: add-this-to-all-my-resources: please namePrefix: prod- patchesStrategicMerge: - prod-scaling.yaml - prod-healthchecks.yaml bases: - api/ - frontend/ - db/ - github.com/example/app?ref=tag-or-branch resources: - ingress.yaml - permissions.yaml configMapGenerator: - name: appconfig files: - global.conf - local.conf=prod.conf ``` ] .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Glossary - A *base* is a kustomization that is referred to by other kustomizations - An *overlay* is a kustomization that refers to other kustomizations - A kustomization can be both a base and an overlay at the same time (a kustomization can refer to another, which can refer to a third) - A *patch* describes how to alter an existing resource (e.g. to change the image in a Deployment; or scaling parameters; etc.) 
- A *variant* is the final outcome of applying bases + overlays (See the [kustomize glossary][glossary] for more definitions!) [glossary]: https://kubectl.docs.kubernetes.io/references/kustomize/glossary/ .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## What Kustomize *cannot* do - By design, there are a number of things that Kustomize won't do - For instance: - using command-line arguments or environment variables to generate a variant - overlays can only *add* resources, not *remove* them - See the full list of [eschewed features](https://kubectl.docs.kubernetes.io/faq/kustomize/eschewedfeatures/) for more details .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Kustomize workflows - The Kustomize documentation proposes two different workflows - *Bespoke configuration* - base and overlays managed by the same team - *Off-the-shelf configuration* (OTS) - base and overlays managed by different teams - base is regularly updated by "upstream" (e.g. a vendor) - our overlays and patches should (hopefully!) 
apply cleanly - we may regularly update the base, or use a remote base .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Remote bases - Kustomize can also use bases that are remote git repositories - Examples: github.com/jpetazzo/kubercoins (remote git repository) github.com/jpetazzo/kubercoins?ref=kustomize (specific tag or branch) - Note that this only works for kustomizations, not individual resources (the specified repository or directory must contain a `kustomization.yaml` file) .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- class: extra-details ## Hashicorp go-getter - Some versions of Kustomize support additional forms for remote resources - Examples: https://releases.hello.io/k/1.0.zip (remote archive) https://releases.hello.io/k/1.0.zip//some-subdir (subdirectory in archive) - This relies on [hashicorp/go-getter](https://github.com/hashicorp/go-getter#url-format) - ... But it prevents Kustomize inclusion in `kubectl` - Avoid them! - See [kustomize#3578](https://github.com/kubernetes-sigs/kustomize/issues/3578) for details .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Managing `kustomization.yaml` - There are many ways to manage `kustomization.yaml` files, including: - the `kustomize` CLI - opening the file with our favorite text editor - ~~web wizards like [Replicated Ship](https://www.replicated.com/ship/)~~ (deprecated) - Let's see these in action! .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Working with the `kustomize` CLI General workflow: 1. `kustomize create` to generate an empty `kustomization.yaml` file 2. `kustomize edit add resource` to add Kubernetes YAML files to it 3. `kustomize edit add patch` to add patches to said resources 4. 
`kustomize edit add ...` or `kustomize edit set ...` (many options!) 5. `kustomize build | kubectl apply -f-` or `kubectl apply -k .` 6. Repeat steps 4-5 as many times as necessary! .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## Why work with the CLI? - Editing manually can introduce errors and typos - With the CLI, we don't need to remember the names of all the options and parameters (just add `--help` after any command to see possible options!) - Make sure to install the completion and try e.g. `kustomize edit add [TAB][TAB]` .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## `kustomize create` .lab[ - Change to a new directory: ```bash mkdir ~/kustomcoins && cd ~/kustomcoins ``` - Run `kustomize create` with the kubercoins repository: ```bash kustomize create --resources https://github.com/jpetazzo/kubercoins ``` <!-- ```look at the files``` --> - Run `kustomize build | kubectl apply -f-` ] .debug[[k8s/kustomize.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/kustomize.md)] --- ## `kubectl` integration - Kustomize has been integrated in `kubectl` (since Kubernetes 1.14) - `kubectl kustomize` is equivalent to `kustomize build` - commands that use `-f` can also use `-k` (`kubectl apply`/`delete`/...) - The `kustomize` tool is still needed if we want to use `create`, `edit`, ... 
- Kubernetes 1.14 to 1.20 use Kustomize 2.0.3

- Kubernetes 1.21 jumps to Kustomize 4.1.2

- Future versions should track Kustomize updates more closely

---

class: extra-details

## Differences between 2.0.3 and later

- Kustomize 2.1 / 3.0 deprecates `bases` (they should be listed in `resources`)

  (this means that "modern" `kustomize edit add resource` won't work with "old" `kubectl apply -k`)

- Kustomize 2.1 introduces `replicas` and `envs`

- Kustomize 3.1 introduces multipatches

- Kustomize 3.2 introduces inline patches in `kustomization.yaml`

- Kustomize 3.3 to 3.10 is mostly internal refactoring

- Kustomize 4.0 drops go-getter again

- Kustomize 4.1 allows patching kind and name

---

## Adding labels

Labels can be added to all resources like this:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
...
commonLabels:
  app.kubernetes.io/name: dockercoins
```

Or with the equivalent CLI command:

```bash
kustomize edit add label app.kubernetes.io/name:dockercoins
```

---

## Use cases for labels

- Example: clean up components that have been removed from the kustomization

- Assuming that `commonLabels` have been set as shown on the previous slide:
  ```bash
  kubectl apply -k . --prune --selector app.kubernetes.io/name=dockercoins
  ```

- ...
This command removes resources that have been removed from the kustomization

- Technically, resources with:

  - a `kubectl.kubernetes.io/last-applied-configuration` annotation

  - labels matching the given selector

---

## Scaling

Instead of using a patch, scaling can be done like this:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
...
replicas:
- name: worker
  count: 5
```

or the CLI equivalent:

```bash
kustomize edit set replicas worker=5
```

It will automatically work with Deployments, ReplicaSets, StatefulSets.

(For other resource types, fall back to a patch.)

---

## Updating images

Instead of using patches, images can be changed like this:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
...
images:
- name: postgres
  newName: harbor.enix.io/my-postgres
- name: dockercoins/worker
  newTag: v0.2
- name: dockercoins/hasher
  newName: registry.dockercoins.io/hasher
  newTag: v0.2
- name: alpine
  digest: sha256:24a0c4b4a4c0eb97a1aabb8e29f18e917d05abfe1b7a7c07857230879ce7d3d3
```

---

## Updating images with the CLI

To add an entry in the `images:` section of the kustomization:

```bash
kustomize edit set image name=[newName][:newTag][@digest]
```

- `[]` denote optional parameters

- `:` and `@` are the delimiters used to indicate a field

Examples:

```bash
kustomize edit set image dockercoins/worker=ghcr.io/dockercoins/worker
kustomize edit set image dockercoins/worker=ghcr.io/dockercoins/worker:v0.2
kustomize edit set image dockercoins/worker=:v0.2
```

---

## Updating
images, pros and cons

- Very convenient when the same image appears multiple times

- Very convenient to define tags (or pin to hashes) outside of the main YAML

- Doesn't support wildcard or generic substitutions:

  - cannot "replace `dockercoins/*` with `ghcr.io/dockercoins/*`"

  - cannot "tag all `dockercoins/*` with `v0.2`"

- Only patches "well-known" image fields (won't work with CRDs referencing images)

- Helm can deal with these scenarios, for instance:
  ```yaml
  image: {{ .Values.registry }}/worker:{{ .Values.version }}
  ```

---

## Advanced resource patching

The example below shows how to:

- patch multiple resources with a selector (new in Kustomize 3.1)
- use an inline patch instead of a separate patch file (new in Kustomize 3.2)

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
...
patches:
- patch: |-
    - op: replace
      path: /spec/template/spec/containers/0/image
      value: alpine
  target:
    kind: Deployment
    labelSelector: "app"
```

(This replaces the image of the first container of every Deployment matching the `app` selector with `alpine`.)

---

## Advanced resource patching, pros and cons

- Very convenient to patch an arbitrary number of resources

- Very convenient to patch any kind of resource, including CRDs

- Doesn't support "fine-grained" patching (e.g. image registry or tag)

- Once again, Helm can do it:
  ```yaml
  image: {{ .Values.registry }}/worker:{{ .Values.version }}
  ```

---

## Differences with Helm

- Helm charts generally require more upfront work

  (while kustomize "bases" are standard Kubernetes YAML)

- ...
But Helm charts are also more powerful; their templating language can:

  - conditionally include/exclude resources or blocks within resources

  - generate values by concatenating, hashing, transforming parameters

  - generate values or resources by iteration (`{{ range ... }}`)

  - access the Kubernetes API during template evaluation

  - [and much more](https://helm.sh/docs/chart_template_guide/)

???

:EN:- Packaging and running apps with Kustomize
:FR:- *Packaging* d'applications avec Kustomize

---

class: pic

.interstitial[]

---

name: toc-managing-stacks-with-helm
class: title

Managing stacks with Helm

.nav[
[Previous part](#toc-kustomize)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-helm-chart-format)
]

.debug[(automatically generated title slide)]

---

# Managing stacks with Helm

- Helm is a (kind of!) package manager for Kubernetes

- We can use it to:

  - find existing packages (called "charts") created by other folks

  - install these packages, configuring them for our particular setup

  - package our own things (for distribution or for internal use)

  - manage the lifecycle of these installs (rollback to previous version etc.)

- It's a "CNCF graduated project", indicating a certain level of maturity

  (more on that later)

.debug[[k8s/helm-intro.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-intro.md)]

---

## From `kubectl run` to YAML

- We can create resources with one-line commands

  (`kubectl run`, `kubectl create deployment`, `kubectl expose`...)

- We can also create resources by loading YAML files

  (with `kubectl apply -f`, `kubectl create -f`...)

- There can be multiple resources in a single YAML file

  (making them convenient to deploy entire stacks)

- However, these YAML bundles often need to be customized

  (e.g.: number of replicas, image version to use, features to enable...)
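
To make the customization problem concrete, here is a minimal sketch of a single manifest from such a bundle. The `worker` name and the `dockercoins/worker:v0.2` image are borrowed from the Kustomize examples earlier in this deck; the `app` label key and the replica count are illustrative assumptions, not the actual DockerCoins manifests. The commented fields are the ones that typically differ from one environment to the next.

```yaml
# Illustrative manifest (an assumption, not the real DockerCoins YAML)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: worker
spec:
  replicas: 1                            # often differs between dev, staging, prod
  selector:
    matchLabels:
      app: worker
  template:
    metadata:
      labels:
        app: worker
    spec:
      containers:
      - name: worker
        image: dockercoins/worker:v0.2   # image version to use
```

Keeping one copy of this file per environment works, but every copy has to be edited by hand whenever the rest of the manifest changes.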

---

## Beyond YAML

- Very often, after putting together our first `app.yaml`, we end up with:

  - `app-prod.yaml`

  - `app-staging.yaml`

  - `app-dev.yaml`

  - instructions indicating to users "please tweak this and that in the YAML"

- That's where using something like
  [CUE](https://github.com/cue-labs/cue-by-example/tree/main/003_kubernetes_tutorial),
  [Kustomize](https://kustomize.io/),
  or [Helm](https://helm.sh/) can help!

- Now we can do something like this:
  ```bash
  helm install app ... --set this.parameter=that.value
  ```

---

## Other features of Helm

- With Helm, we create "charts"

- These charts can be used internally or distributed publicly

- Public charts can be indexed through the [Artifact Hub](https://artifacthub.io/)

- This gives us a way to find and install other folks' charts

- Helm also gives us ways to manage the lifecycle of what we install:

  - keep track of what we have installed

  - upgrade versions, change parameters, roll back, uninstall

- Furthermore, even if it's not "the" standard, it's definitely "a" standard!

---

## CNCF graduation status

- On April 30th 2020, Helm was the 10th project to *graduate* within the CNCF

  (alongside Containerd, Prometheus, and Kubernetes itself)

- This is an acknowledgement by the CNCF for projects that

  *demonstrate thriving adoption, an open governance process,
  <br/>
  and a strong commitment to community, sustainability, and inclusivity.*

- See [CNCF announcement](https://www.cncf.io/announcement/2020/04/30/cloud-native-computing-foundation-announces-helm-graduation/)
  and [Helm announcement](https://helm.sh/blog/celebrating-helms-cncf-graduation/)

- In other words: Helm is here to stay

---

## Helm concepts

- `helm` is a CLI tool

- It is used to find, install, upgrade *charts*

- A chart is an archive containing templatized YAML bundles

- Charts are versioned

- Charts can be stored on private or public repositories

---

## Differences between charts and packages

- A package (deb, rpm...) contains binaries, libraries, etc.

- A chart contains YAML manifests

  (the binaries, libraries, etc. are in the images referenced by the chart)

- On most distributions, a package can only be installed once

  (installing another version replaces the installed one)

- A chart can be installed multiple times

- Each installation is called a *release*

- This allows us to install e.g. 10 instances of MongoDB

  (with potentially different versions and configurations)

---

class: extra-details

## Wait a minute ...

*But, on my Debian system, I have Python 2 **and** Python 3.
<br/>
Also, I have multiple versions of the Postgres database engine!*

Yes!

But they have different package names:

- `python2.7`, `python3.8`

- `postgresql-10`, `postgresql-11`

Good to know: the Postgres package in Debian includes
provisions to deploy multiple Postgres servers on the
same system, but it's an exception (and it's a lot of
work done by the package maintainer, not by the `dpkg`
or `apt` tools).

---

## Helm 2 vs Helm 3

- Helm 3 was released [November 13, 2019](https://helm.sh/blog/helm-3-released/)

- Charts remain compatible between Helm 2 and Helm 3

- The CLI is very similar (with minor changes to some commands)

- The main difference is that Helm 2 uses `tiller`, a server-side component

- Helm 3 doesn't use `tiller` at all, making it simpler (yay!)

- If you see references to `tiller` in a tutorial, documentation... that doc is obsolete!

---

class: extra-details

## What was the problem with `tiller`?

- With Helm 3:

  - the `helm` CLI communicates directly with the Kubernetes API

  - it creates resources (deployments, services...)
with our credentials

- With Helm 2:

  - the `helm` CLI communicates with `tiller`, telling `tiller` what to do

  - `tiller` then communicates with the Kubernetes API, using its own credentials

- This indirect model caused significant permissions headaches

- It also made it more complicated to embed Helm in other tools

---

## Installing Helm

- If the `helm` CLI is not installed in your environment, install it

.lab[

- Check if `helm` is installed:
  ```bash
  helm
  ```

- If it's not installed, run the following command:
  ```bash
  curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get-helm-3 \
  | bash
  ```

]

(To install Helm 2, replace `get-helm-3` with `get`.)

---

## Charts and repositories

- A *repository* (or repo for short) is a collection of charts

- It's just a bunch of files

  (they can be hosted by a static HTTP server, or on a local directory)

- We can add "repos" to Helm, giving them a nickname

- The nickname is used when referring to charts on that repo

  (for instance, if we try to install `hello/world`, that
  means the chart `world` on the repo `hello`; and that repo
  `hello` might be something like https://blahblah.hello.io/charts/)

---

## How to find charts

- Go to the [Artifact Hub](https://artifacthub.io/packages/search?kind=0) (https://artifacthub.io)

- Or use `helm search hub ...` from the CLI

- Let's try to find a Helm chart for something called "OWASP Juice Shop"!
  (it is a famous demo app used in security challenges)

---

## Finding charts from the CLI

- We can use `helm search hub <keyword>`

.lab[

- Look for the OWASP Juice Shop app:
  ```bash
  helm search hub owasp juice
  ```

- Since the URLs are truncated, try with the YAML output:
  ```bash
  helm search hub owasp juice -o yaml
  ```

]

Then go to → https://artifacthub.io/packages/helm/seccurecodebox/juice-shop

---

## Finding charts on the web

- We can also use the Artifact Hub search feature

.lab[

- Go to https://artifacthub.io/

- In the search box on top, enter "owasp juice"

- Click on the "juice-shop" result (not "multi-juicer" or "juicy-ctf")

]

---

## Installing the chart

- Click on the "Install" button, it will show instructions

.lab[

- First, add the repository for that chart:
  ```bash
  helm repo add juice https://charts.securecodebox.io
  ```

- Then, install the chart:
  ```bash
  helm install my-juice-shop juice/juice-shop
  ```

]

Note: it is also possible to install a chart directly, with `--repo https://...`

---

## Charts and releases

- "Installing a chart" means creating a *release*

- In the previous example, the release was named "my-juice-shop"

- We can also use `--generate-name` to ask Helm to generate a name for us

.lab[

- List the releases:
  ```bash
  helm list
  ```

- Check that we have a `my-juice-shop-...` Pod up and running:
  ```bash
  kubectl get pods
  ```

]

---

## Viewing resources of a release

-
This specific chart labels all its resources with an `app.kubernetes.io/instance` label

- We can use a selector to see these resources

.lab[

- List all the resources created by this release:
  ```bash
  kubectl get all --selector=app.kubernetes.io/instance=my-juice-shop
  ```

]

Note: this label wasn't added automatically by Helm.
<br/>
It is defined in that chart. In other words, not all charts will provide this label.

---

## Configuring a release

- By default, `juice/juice-shop` creates a service of type `ClusterIP`

- We would like to change that to a `NodePort`

- We could use `kubectl edit service my-juice-shop`, but ...

  ... our changes would get overwritten next time we update that chart!

- Instead, we are going to *set a value*

- Values are parameters that the chart can use to change its behavior

- Values have default values

- Each chart is free to define its own values and their defaults

---

## Checking possible values

- We can inspect a chart with `helm show` or `helm inspect`

.lab[

- Look at the README for the app:
  ```bash
  helm show readme juice/juice-shop
  ```

- Look at the values and their defaults:
  ```bash
  helm show values juice/juice-shop
  ```

]

The `values` may or may not have useful comments.

The `readme` may or may not have (accurate) explanations for the values.

(If we're unlucky, there won't be any indication about how to use the values!)
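
For orientation, the output of `helm show values` is itself a YAML document. The excerpt below is a hypothetical sketch of what such output could look like, not the actual values of `juice/juice-shop`: only `service.type` (defaulting to `ClusterIP`) is confirmed by these slides, while `replicaCount` and `service.port` are assumed names and the real chart may differ.

```yaml
# Hypothetical values excerpt (everything except service.type is an assumption)
replicaCount: 1      # assumed key; many charts expose something similar

service:
  type: ClusterIP    # the default mentioned in these slides
  port: 3000         # assumed key and value
```

A nested key such as `type` under `service` is addressed with a dotted path (`service.type`) when it is overridden.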

---

## Setting values

- Values can be set when installing a chart, or when upgrading it

- We are going to update `my-juice-shop` to change the type of the service

.lab[

- Update `my-juice-shop`:
  ```bash
  helm upgrade my-juice-shop juice/juice-shop \
       --set service.type=NodePort
  ```

]

Note that we have to specify the chart that we use (`juice/juice-shop`),
even if we just want to update some values.

We can set multiple values. If we want to set many values, we can use `-f`/`--values` and pass a YAML file with all the values.

All unspecified values will take the default values defined in the chart.

---

## Connecting to the Juice Shop

- Let's check the app that we just installed

.lab[

- Check the node port allocated to the service:
  ```bash
  kubectl get service my-juice-shop
  PORT=$(kubectl get service my-juice-shop -o jsonpath={..nodePort})
  ```

- Connect to it:
  ```bash
  curl localhost:$PORT/
  ```

]

???

:EN:- Helm concepts
:EN:- Installing software with Helm
:EN:- Finding charts on the Artifact Hub

:FR:- Fonctionnement général de Helm
:FR:- Installer des composants via Helm
:FR:- Trouver des *charts* sur *Artifact Hub*

:T: Getting started with Helm and its concepts

:Q: Which comparison is the most adequate?
:A: Helm is a firewall, charts are access lists
:A: ✔️Helm is a package manager, charts are packages
:A: Helm is an artefact repository, charts are artefacts
:A: Helm is a CI/CD platform, charts are CI/CD pipelines

:Q: What's required to distribute a Helm chart?
:A: A Helm commercial license
:A: A Docker registry
:A: An account on the Helm Hub
:A: ✔️An HTTP server

---

class: pic

.interstitial[]

---

name: toc-helm-chart-format
class: title

Helm chart format

.nav[
[Previous part](#toc-managing-stacks-with-helm)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-creating-a-basic-chart)
]

---

# Helm chart format

- What exactly is a chart?

- What's in it?

- What would be involved in creating a chart?

  (we won't create a chart, but we'll see the required steps)

.debug[[k8s/helm-chart-format.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-chart-format.md)]

---

## What is a chart

- A chart is a set of files

- Some of these files are mandatory for the chart to be viable

  (more on that later)

- These files are typically packed in a tarball

- These tarballs are stored in "repos"

  (which can be static HTTP servers)

- We can install from a repo, from a local tarball, or an unpacked tarball

  (the latter option is preferred when developing a chart)

---

## What's in a chart

- A chart must have at least:

  - a `templates` directory, with YAML manifests for Kubernetes resources

  - a `values.yaml` file, containing (tunable) parameters for the chart

  - a `Chart.yaml` file, containing metadata (name, version, description ...)
- Let's look at a simple chart for a basic demo app

---

## Adding the repo

- If you haven't done it before, you need to add the repo for that chart

.lab[

- Add the repo that holds the chart for the OWASP Juice Shop:
  ```bash
  helm repo add juice https://charts.securecodebox.io
  ```

]

---

## Downloading a chart

- We can use `helm pull` to download a chart from a repo

.lab[

- Download the tarball for `juice/juice-shop`:
  ```bash
  helm pull juice/juice-shop
  ```
  (This will create a file named `juice-shop-X.Y.Z.tgz`.)

- Or, download + untar `juice/juice-shop`:
  ```bash
  helm pull juice/juice-shop --untar
  ```
  (This will create a directory named `juice-shop`.)

]

---

## Looking at the chart's content

- Let's look at the files and directories in the `juice-shop` chart

.lab[

- Display the tree structure of the chart we just downloaded:
  ```bash
  tree juice-shop
  ```

]

We see the components mentioned above: `Chart.yaml`, `templates/`, `values.yaml`.

---

## Templates

- The `templates/` directory contains YAML manifests for Kubernetes resources

  (Deployments, Services, etc.)

- These manifests can contain template tags

  (using the standard Go template library)

.lab[

- Look at the template file for the Service resource:
  ```bash
  cat juice-shop/templates/service.yaml
  ```

]

---

## Analyzing the template file

- Tags are identified by `{{ ...
}}`

- `{{ template "x.y" }}` expands a [named template](https://helm.sh/docs/chart_template_guide/named_templates/#declaring-and-using-templates-with-define-and-template)

  (previously defined with `{{ define "x.y" }}...stuff...{{ end }}`)

- The `.` in `{{ template "x.y" . }}` is the *context* for that named template

  (so that the named template block can access variables from the local context)

- `{{ .Release.xyz }}` refers to [built-in variables](https://helm.sh/docs/chart_template_guide/builtin_objects/) initialized by Helm

  (indicating the chart name, version, whether we are installing or upgrading ...)

- `{{ .Values.xyz }}` refers to tunable/settable [values](https://helm.sh/docs/chart_template_guide/values_files/)

  (more on that in a minute)

---

## Values

- Each chart comes with a
  [values file](https://helm.sh/docs/chart_template_guide/values_files/)

- It's a YAML file containing a set of default parameters for the chart

- The values can be accessed in templates with e.g. `{{ .Values.x.y }}`

  (corresponding to field `y` in map `x` in the values file)

- The values can be set or overridden when installing or upgrading a chart:

  - with `--set x.y=z` (can be used multiple times to set multiple values)

  - with `--values some-yaml-file.yaml` (set a bunch of values from a file)

- Charts following best practices will have values following specific patterns

  (e.g. having a `service` map that lets us set `service.type` etc.)

---

## Other useful tags

- `{{ if x }} y {{ end }}` includes `y` if `x` evaluates to `true`

  (can be used for e.g.
healthchecks, annotations, or even an entire resource)

- `{{ range x }} y {{ end }}` iterates over `x`, evaluating `y` each time

  (the elements of `x` are assigned to `.` in the range scope)

- `{{- x }}`/`{{ x -}}` will remove whitespace on the left/right

- The whole [Sprig](http://masterminds.github.io/sprig/) library, with additions:

  `lower` `upper` `quote` `trim` `default` `b64enc` `b64dec` `sha256sum` `indent` `toYaml` ...

---

## Pipelines

- `{{ quote blah }}` can also be expressed as `{{ blah | quote }}`

- With multiple arguments, `{{ x y z }}` can be expressed as `{{ z | x y }}`

- Example: `{{ .Values.annotations | toYaml | indent 4 }}`

  - transforms the map under `annotations` into a YAML string

  - indents it with 4 spaces (to match the surrounding context)

- Pipelines are not specific to Helm, but a feature of Go templates

  (check the [Go text/template documentation](https://golang.org/pkg/text/template/) for more details and examples)

---

## README and NOTES.txt

- At the top level of the chart, it's a good idea to have a README

- It will be viewable with e.g. `helm show readme juice/juice-shop`

- In the `templates/` directory, we can also have a `NOTES.txt` file

- When the chart is installed (or upgraded), `NOTES.txt` is processed too

  (i.e. its `{{ ...
}}` tags are evaluated)

- It gets displayed after the install or upgrade

- It's a great place to generate messages to tell the user:

  - how to connect to the release they just deployed

  - any passwords or other things that we generated for them

---

## Additional files

- We can place arbitrary files in the chart (outside of the `templates/` directory)

- They can be accessed in templates with `.Files`

- They can be transformed into ConfigMaps or Secrets with `AsConfig` and `AsSecrets`

  (see [this example](https://helm.sh/docs/chart_template_guide/accessing_files/#configmap-and-secrets-utility-functions) in the Helm docs)

---

## Hooks and tests

- We can define *hooks* in our templates

- Hooks are resources annotated with `"helm.sh/hook": NAME-OF-HOOK`

- Hook names include `pre-install`, `post-install`, `test`, [and many more](https://helm.sh/docs/topics/charts_hooks/#the-available-hooks)

- The resources defined in hooks are loaded at a specific time

- Hook execution is *synchronous*

  (if the resource is a Job or Pod, Helm will wait for its completion)

- This can be used for database migrations, backups, notifications, smoke tests ...

- Hooks named `test` are executed only when running `helm test RELEASE-NAME`

???
:EN:- Helm charts format
:FR:- Le format des *Helm charts*

---

class: pic

.interstitial[]

---

name: toc-creating-a-basic-chart
class: title

Creating a basic chart

.nav[
[Previous part](#toc-helm-chart-format)
|
[Back to table of contents](#toc-part-1)
|
[Next part](#toc-exercise--helm-charts)
]

---

# Creating a basic chart

- We are going to show a way to create a *very simplified* chart

- In a real chart, *lots of things* would be templatized

  (Resource names, service types, number of replicas...)

.lab[

- Create a sample chart:
  ```bash
  helm create dockercoins
  ```

- Move away the sample templates and create an empty template directory:
  ```bash
  mv dockercoins/templates dockercoins/default-templates
  mkdir dockercoins/templates
  ```

]

.debug[[k8s/helm-create-basic-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-basic-chart.md)]

---

## Adding the manifests of our app

- There is a convenient `dockercoins.yaml` in the repo

.lab[

- Copy the YAML file to the `templates` subdirectory in the chart:
  ```bash
  cp ~/container.training/k8s/dockercoins.yaml dockercoins/templates
  ```

]

- Note: it is probably easier to have multiple YAML files

  (rather than a single, big file with all the manifests)

- But that works too!

---

## Testing our Helm chart

- Our Helm chart is now ready

  (as surprising as it might seem!)

.lab[

- Let's try to install the chart:
  ```
  helm install helmcoins dockercoins
  ```
  (`helmcoins` is the name of the release; `dockercoins` is the local path of the chart)

]

--

- If the application is already deployed, this will fail:
  ```
  Error: rendered manifests contain a resource that already exists.
  Unable to continue with install: existing resource conflict:
  kind: Service, namespace: default, name: hasher
  ```

---

## Switching to another namespace

- If there is already a copy of dockercoins in the current namespace:

  - we can switch with `kubens` or `kubectl config set-context`

  - we can also tell Helm to use a different namespace

.lab[

- Create a new namespace:
  ```bash
  kubectl create namespace helmcoins
  ```

- Deploy our chart in that namespace:
  ```bash
  helm install helmcoins dockercoins --namespace=helmcoins
  ```

]

---

## Helm releases are namespaced

- Let's try to see the release that we just deployed

.lab[

- List Helm releases:
  ```bash
  helm list
  ```

]

Our release doesn't show up!

We have to specify its namespace (or switch to that namespace).

---

## Specifying the namespace

- Try again, with the correct namespace

.lab[

- List Helm releases in `helmcoins`:
  ```bash
  helm list --namespace=helmcoins
  ```

]

---

## Checking our new copy of DockerCoins

- We can check the worker logs, or the web UI

.lab[

- Retrieve the NodePort number of the web UI:
  ```bash
  kubectl get service webui --namespace=helmcoins
  ```

- Open it in a web browser

- Look at the worker logs:
  ```bash
  kubectl logs deploy/worker --tail=10 --follow --namespace=helmcoins
  ```

]

Note: it might take a minute or two for the worker to start.

---

## Discussion, shortcomings

- Helm (and Kubernetes) best practices recommend adding a number of labels and annotations

  (e.g. `app.kubernetes.io/name`, `helm.sh/chart`, `app.kubernetes.io/instance` ...)

- Our basic chart doesn't have any of these

- Our basic chart doesn't use any template tag

- Does it make sense to use Helm in that case?

- *Yes,* because Helm will:

  - track the resources created by the chart

  - save successive revisions, allowing us to roll back

[Helm docs](https://helm.sh/docs/topics/chart_best_practices/labels/)
and [Kubernetes docs](https://kubernetes.io/docs/concepts/overview/working-with-objects/common-labels/)
have details about recommended annotations and labels.

---

## Cleaning up

- Let's remove that chart before moving on

.lab[

- Delete the release (don't forget to specify the namespace):
  ```bash
  helm delete helmcoins --namespace=helmcoins
  ```

]

---

## Tips when writing charts

- It is not necessary to `helm install`/`upgrade` to test a chart

- If we just want to look at the generated YAML, use `helm template`:
  ```bash
  helm template ./my-chart
  helm template release-name ./my-chart
  ```

- Of course, we can use `--set` and `--values` too

- Note that this won't fully validate the YAML!

  (e.g.
if there is `apiVersion: klingon` it won't complain) - This can be used when trying things out .debug[[k8s/helm-create-basic-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-basic-chart.md)] --- ## Exploring the templating system Try to put something like this in a file in the `templates` directory: ```yaml hello: {{ .Values.service.port }} comment: {{/* something completely.invalid !!! */}} type: {{ .Values.service | typeOf | printf }} ### print complex value {{ .Values.service | toYaml }} ### indent it indented: {{ .Values.service | toYaml | indent 2 }} ``` Then run `helm template`. The result is not a valid YAML manifest, but this is a great debugging tool! ??? :EN:- Writing a basic Helm chart for the whole app :FR:- Écriture d'un *chart* Helm simplifié .debug[[k8s/helm-create-basic-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-basic-chart.md)] --- class: pic .interstitial[] --- name: toc-exercise--helm-charts class: title Exercise — Helm Charts .nav[ [Previous part](#toc-creating-a-basic-chart) | [Back to table of contents](#toc-part-1) | [Next part](#toc-creating-better-helm-charts) ] .debug[(automatically generated title slide)] --- # Exercise — Helm Charts - We want to deploy dockercoins with a Helm chart - We want to have a "generic chart" and instantiate it 5 times (once for each service) - We will pass values to the chart to customize it for each component (to indicate which image to use, which ports to expose, etc.) - We'll use `helm create` as a starting point for our generic chart .debug[[exercises/helm-generic-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-generic-chart-details.md)] --- ## Goal - Have a directory with the generic chart (e.g. `generic-chart`) - Have 5 value files (e.g. 
`hasher.yml`, `redis.yml`, `rng.yml`, `webui.yml`, `worker.yml`) - Be able to install dockercoins by running 5 times: `helm install X ./generic-chart --values=X.yml` .debug[[exercises/helm-generic-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-generic-chart-details.md)] --- ## Hints - There are many little things to tweak in the generic chart (service names, port numbers, healthchecks...) - Check the training slides if you need a refresher! .debug[[exercises/helm-generic-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-generic-chart-details.md)] --- ## Bonus 1 - Minimize the amount of values that have to be set - Option 1: no values at all for `rng` and `hasher` (default values assume HTTP service listening on port 80) - Option 2: no values at all for `worker` (default values assume worker container with no service) .debug[[exercises/helm-generic-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-generic-chart-details.md)] --- ## Bonus 2 - Handle healthchecks - Make sure that healthchecks are enabled in HTTP services - ...But not in Redis or in the worker .debug[[exercises/helm-generic-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-generic-chart-details.md)] --- ## Bonus 3 - Make it easy to change image versions - E.g. 
change `v0.1` to `v0.2` by changing only *one* thing in *one* place .debug[[exercises/helm-generic-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-generic-chart-details.md)] --- ## Bonus 4 - Make it easy to use images on a different registry - We can assume that the images will always have the same names (`hasher`, `rng`, `webui`, `worker`) - And the same tag (`v0.1`) .debug[[exercises/helm-generic-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-generic-chart-details.md)] --- class: pic .interstitial[] --- name: toc-creating-better-helm-charts class: title Creating better Helm charts .nav[ [Previous part](#toc-exercise--helm-charts) | [Back to table of contents](#toc-part-2) | [Next part](#toc-charts-using-other-charts) ] .debug[(automatically generated title slide)] --- # Creating better Helm charts - We are going to create a chart with the helper `helm create` - This will give us a chart implementing lots of Helm best practices (labels, annotations, structure of the `values.yaml` file ...) - We will use that chart as a generic Helm chart - We will use it to deploy DockerCoins - Each component of DockerCoins will have its own *release* - In other words, we will "install" that Helm chart multiple times (one time per component of DockerCoins) .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Creating a generic chart - Rather than starting from scratch, we will use `helm create` - This will give us a basic chart that we will customize .lab[ - Create a basic chart: ```bash cd ~ helm create helmcoins ``` ] This creates a basic chart in the directory `helmcoins`. .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## What's in the basic chart? 
- The basic chart will create a Deployment and a Service - Optionally, it will also include an Ingress - If we don't pass any values, it will deploy the `nginx` image - We can override many things in that chart - Let's try to deploy DockerCoins components with that chart! .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Writing `values.yaml` for our components - We need to write one `values.yaml` file for each component (hasher, redis, rng, webui, worker) - We will start with the `values.yaml` of the chart, and remove what we don't need - We will create 5 files: hasher.yaml, redis.yaml, rng.yaml, webui.yaml, worker.yaml - In each file, we want to have: ```yaml image: repository: IMAGE-REPOSITORY-NAME tag: IMAGE-TAG ``` .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Getting started - For component X, we want to use the image dockercoins/X:v0.1 (for instance, for rng, we want to use the image dockercoins/rng:v0.1) - Exception: for redis, we want to use the official image redis:latest .lab[ - Write YAML files for the 5 components, with the following model: ```yaml image: repository: `IMAGE-REPOSITORY-NAME` (e.g. dockercoins/worker) tag: `IMAGE-TAG` (e.g. 
v0.1) ``` ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Deploying DockerCoins components - For convenience, let's work in a separate namespace .lab[ - Create a new namespace (if it doesn't already exist): ```bash kubectl create namespace helmcoins ``` - Switch to that namespace: ```bash kns helmcoins ``` ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Deploying the chart - To install a chart, we can use the following command: ```bash helm install COMPONENT-NAME CHART-DIRECTORY ``` - We can also use the following command, which is *idempotent*: ```bash helm upgrade COMPONENT-NAME CHART-DIRECTORY --install ``` .lab[ - Install the 5 components of DockerCoins: ```bash for COMPONENT in hasher redis rng webui worker; do helm upgrade $COMPONENT helmcoins --install --values=$COMPONENT.yaml done ``` ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- class: extra-details ## "Idempotent" - Idempotent = that can be applied multiple times without changing the result (the word is commonly used in maths and computer science) - In this context, this means: - if the action (installing the chart) wasn't done, do it - if the action was already done, don't do anything - Ideally, when such an action fails, it can be retried safely (as opposed to, e.g., installing a new release each time we run it) - Other example: `kubectl apply -f some-file.yaml` .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Checking what we've done - Let's see if DockerCoins is working! 
.lab[ - Check the logs of the worker: ```bash stern worker ``` - Look at the resources that were created: ```bash kubectl get all ``` ] There are *many* issues to fix! .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Can't pull image - It looks like our images can't be found .lab[ - Use `kubectl describe` on any of the pods in error ] - We're trying to pull `rng:1.16.0` instead of `rng:v0.1`! - Where does that `1.16.0` tag come from? .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Inspecting our template - Let's look at the `templates/` directory (and try to find the one generating the Deployment resource) .lab[ - Show the structure of the `helmcoins` chart that Helm generated: ```bash tree helmcoins ``` - Check the file `helmcoins/templates/deployment.yaml` - Look for the `image:` parameter ] *The image tag references `{{ .Chart.AppVersion }}`. Where does that come from?* .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## The `.Chart` variable - `.Chart` is a map corresponding to the values in `Chart.yaml` - Let's look for `AppVersion` there! .lab[ - Check the file `helmcoins/Chart.yaml` - Look for the `appVersion:` parameter ] (Yes, the case is different between the template and the Chart file.) 
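As a rough illustration of that mapping, here is what the relevant part of `helmcoins/Chart.yaml` might look like right after `helm create`. The exact contents depend on the Helm version, so treat the values as assumptions; only `appVersion: "1.16.0"` is implied by the tag that we saw when the image failed to pull.

```yaml
# helmcoins/Chart.yaml (excerpt; sketch, contents may differ between Helm versions)
apiVersion: v2
name: helmcoins
version: 0.1.0        # version of the chart itself   -> .Chart.Version
appVersion: "1.16.0"  # version of the packaged app   -> .Chart.AppVersion
```

Fields in `Chart.yaml` start with a lowercase letter, while templates access them with an uppercase first letter.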
.debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Using the correct tags - If we change `AppVersion` to `v0.1`, it will change for *all* deployments (including redis) - Instead, let's change the *template* to use `{{ .Values.image.tag }}` (to match what we've specified in our values YAML files) .lab[ - Edit `helmcoins/templates/deployment.yaml` - Replace `{{ .Chart.AppVersion }}` with `{{ .Values.image.tag }}` ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Upgrading to use the new template - Technically, we just made a new version of the *chart* - To use the new template, we need to *upgrade* the release to use that chart .lab[ - Upgrade all components: ```bash for COMPONENT in hasher redis rng webui worker; do helm upgrade $COMPONENT helmcoins done ``` - Check how our pods are doing: ```bash kubectl get pods ``` ] We should see all pods "Running". But ... not all of them are READY. .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Troubleshooting readiness - `hasher`, `rng`, `webui` should show up as `1/1 READY` - But `redis` and `worker` should show up as `0/1 READY` - Why? 
.debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Troubleshooting pods - The easiest way to troubleshoot pods is to look at *events* - We can look at all the events on the cluster (with `kubectl get events`) - Or we can use `kubectl describe` on the objects that have problems (`kubectl describe` will retrieve the events related to the object) .lab[ - Check the events for the redis pods: ```bash kubectl describe pod -l app.kubernetes.io/name=redis ``` ] It's failing both its liveness and readiness probes! .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Healthchecks - The default chart defines healthchecks doing HTTP requests on port 80 - That won't work for redis and worker (redis is not HTTP, and not on port 80; worker doesn't even listen) -- - We could remove or comment out the healthchecks - We could also make them conditional - This sounds more interesting, let's do that! 
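One possible shape for such a conditional is sketched below. It assumes a hypothetical `healthcheck.enabled` value, which does not exist in the chart generated by `helm create`; we would have to add it ourselves (for instance `healthcheck.enabled: true` in the chart's `values.yaml`, and `false` in `redis.yaml` and `worker.yaml`).

```yaml
# helmcoins/templates/deployment.yaml (excerpt)
# "healthcheck.enabled" is a hypothetical value name, chosen for this sketch.
# It needs a default in values.yaml, otherwise rendering fails on a missing key.
          {{- if .Values.healthcheck.enabled }}
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
          {{- end }}
```

With a value-driven condition, the same chart can serve HTTP components (probes enabled) and non-HTTP components (probes disabled) without being edited for each release.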
.debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Conditionals - We need to enclose the healthcheck block with: `{{ if false }}` at the beginning (we can change the condition later) `{{ end }}` at the end .lab[ - Edit `helmcoins/templates/deployment.yaml` - Add `{{ if false }}` on the line before `livenessProbe` - Add `{{ end }}` after the `readinessProbe` section (see next slide for details) ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- This is what the new YAML should look like (added lines in yellow): ```yaml ports: - name: http containerPort: 80 protocol: TCP `{{ if false }}` livenessProbe: httpGet: path: / port: http readinessProbe: httpGet: path: / port: http `{{ end }}` resources: {{- toYaml .Values.resources | nindent 12 }} ``` .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Testing the new chart - We need to upgrade all the services again to use the new chart .lab[ - Upgrade all components: ```bash for COMPONENT in hasher redis rng webui worker; do helm upgrade $COMPONENT helmcoins done ``` - Check how our pods are doing: ```bash kubectl get pods ``` ] Everything should now be running! .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## What's next? - Is this working now? .lab[ - Let's check the logs of the worker: ```bash stern worker ``` ] This error might look familiar ... The worker can't resolve `redis`. Typically, that error means that the `redis` service doesn't exist. 
.debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Checking services - What about the services created by our chart? .lab[ - Check the list of services: ```bash kubectl get services ``` ] They are named `COMPONENT-helmcoins` instead of just `COMPONENT`. We need to change that! .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Where do the service names come from? - Look at the YAML template used for the services - It should be using `{{ include "helmcoins.fullname" . }}` - `include` indicates a *template block* defined somewhere else .lab[ - Find where that `fullname` thing is defined: ```bash grep 'define.*fullname' helmcoins/templates/* ``` ] It should be in `_helpers.tpl`. We can look at the definition, but it's fairly complex ... .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Changing service names - Instead of that `{{ include }}` tag, let's use the name of the release - The name of the release is available as `{{ .Release.Name }}` .lab[ - Edit `helmcoins/templates/service.yaml` - Replace the service name with `{{ .Release.Name }}` - Upgrade all the releases to use the new chart - Confirm that the services now have the right names ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Is it working now? - If we look at the worker logs, it appears that the worker is still stuck - What could be happening? -- - The redis service is not on port 80!
- Let's see how the port number is set - We need to look at both the *deployment* template and the *service* template .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Service template - In the service template, we have the following section: ```yaml ports: - port: {{ .Values.service.port }} targetPort: http protocol: TCP name: http ``` - `port` is the port on which the service is "listening" (i.e. to which our code needs to connect) - `targetPort` is the port on which the pods are listening - The `name` is not important (it's OK if it's `http` even for non-HTTP traffic) .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Setting the redis port - Let's add a `service.port` value to the redis release .lab[ - Edit `redis.yaml` to add: ```yaml service: port: 6379 ``` - Apply the new values file: ```bash helm upgrade redis helmcoins --values=redis.yaml ``` ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Deployment template - If we look at the deployment template, we see this section: ```yaml ports: - name: http containerPort: 80 protocol: TCP ``` - The container port is hard-coded to 80 - We'll change it to use the port number specified in the values .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Changing the deployment template .lab[ - Edit `helmcoins/templates/deployment.yaml` - The line with `containerPort` should be: ```yaml containerPort: {{ .Values.service.port }} ``` ] .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Apply changes - Re-run the 
for loop to execute `helm upgrade` one more time - Check the worker logs - This time, it should be working! .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- ## Extra steps - We don't need to create a service for the worker - We can put the whole service block in a conditional (this will require additional changes in other files referencing the service) - We can set the webui to be a NodePort service - We can change the number of workers with `replicaCount` - And much more! ??? :EN:- Writing better Helm charts for app components :FR:- Écriture de *charts* composant par composant .debug[[k8s/helm-create-better-chart.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-create-better-chart.md)] --- class: pic .interstitial[] --- name: toc-charts-using-other-charts class: title Charts using other charts .nav[ [Previous part](#toc-creating-better-helm-charts) | [Back to table of contents](#toc-part-2) | [Next part](#toc-helm-and-invalid-values) ] .debug[(automatically generated title slide)] --- # Charts using other charts - Helm charts can have *dependencies* on other charts - These dependencies will help us to share or reuse components (so that we write and maintain fewer manifests, fewer templates, less code!) - As an example, we will use a community chart for Redis - This will help people who write charts, and people who use them - ... And potentially remove a lot of code!
✌️ .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Redis in DockerCoins - In the DockerCoins demo app, we have 5 components: - 2 internal webservices - 1 worker - 1 public web UI - 1 Redis data store - Every component is running some custom code, except Redis - Every component is using a custom image, except Redis (which is using the official `redis` image) - Could we use a standard chart for Redis? - Yes! Dependencies to the rescue! .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Adding our dependency - First, we will add the dependency to the `Chart.yaml` file - Then, we will ask Helm to download that dependency - We will also *lock* the dependency (lock it to a specific version, to ensure reproducibility) .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Declaring the dependency - First, let's edit `Chart.yaml` .lab[ - In `Chart.yaml`, fill the `dependencies` section: ```yaml dependencies: - name: redis version: 11.0.5 repository: https://charts.bitnami.com/bitnami condition: redis.enabled ``` ] Where do the `repository` and `version` values come from? We're assuming here that we did our research, or that our resident Helm expert advised us to use Bitnami's Redis chart.
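For context, here is a sketch of how the whole `Chart.yaml` could look once the dependency is declared. The `name`, `description`, and `version` of the parent chart are placeholders chosen for illustration; the `dependencies` entry is the one from this slide. Declaring dependencies directly in `Chart.yaml` requires `apiVersion: v2`.

```yaml
# Chart.yaml (sketch; the parent chart metadata below is illustrative)
apiVersion: v2          # v2 is needed to declare dependencies in Chart.yaml
name: dockercoins       # placeholder name for the parent chart
description: A Helm chart for DockerCoins
version: 0.1.0          # placeholder version of the parent chart
dependencies:
  - name: redis
    version: 11.0.5
    repository: https://charts.bitnami.com/bitnami
    condition: redis.enabled
```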
.debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Conditions - The `condition` field gives us a way to enable/disable the dependency: ```yaml condition: redis.enabled ``` - Here, we can disable Redis with the Helm flag `--set redis.enabled=false` (or set that value in a `values.yaml` file) - Of course, this is mostly useful for *optional* dependencies (otherwise, the app ends up being broken since it'll miss a component) .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Lock & Load! - After adding the dependency, we ask Helm to pin and download it .lab[ - Ask Helm: ```bash helm dependency update ``` (Or `helm dep up`) ] - This will create `Chart.lock` and fetch the dependency .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## What's `Chart.lock`? - This is a common pattern with dependencies (see also: `Gemfile.lock`, `package-lock.json`, and many others) - This lets us define loose dependencies in `Chart.yaml` (e.g. "version 11.whatever, but below 12") - But have the exact version used in `Chart.lock` - This ensures reproducible deployments - `Chart.lock` can (should!) be added to our source tree - `Chart.lock` can (should!) regularly be updated .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Loose dependencies - Here is an example of a loose version requirement: ```yaml dependencies: - name: redis version: ">=11, <12" repository: https://charts.bitnami.com/bitnami ``` - This makes sure that we have the most recent version in the 11.x train - ...
But without upgrading to version 12.x (because it might be incompatible) .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## `build` vs `update` - Helm actually offers two commands to manage dependencies: `helm dependency build` = fetch dependencies listed in `Chart.lock` `helm dependency update` = update `Chart.lock` (and run `build`) - When the dependency gets updated, we can/should: - `helm dep up` (update `Chart.lock` and fetch new chart) - test! - if everything is fine, `git add Chart.lock` and commit .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Where are my dependencies? - Dependencies are downloaded to the `charts/` subdirectory - When they're downloaded, they stay in compressed format (`.tgz`) - Should we commit them to our code repository? - Pros: - more resilient to internet/mirror failures/decommissioning - Cons: - can add a lot of weight to the repo if charts are big or change often - this can be solved by extra tools like git-lfs .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Dependency tuning - DockerCoins expects the `redis` Service to be named `redis` - Our Redis chart uses a different Service name by default - Service name is `{{ template "redis.fullname" . }}-master` - `redis.fullname` looks like this: ``` {{- define "redis.fullname" -}} {{- if .Values.fullnameOverride -}} {{- .Values.fullnameOverride | trunc 63 | trimSuffix "-" -}} {{- else -}} [...] {{- end }} {{- end }} ``` - How do we fix this? .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Setting dependency variables - If we set `fullnameOverride` to `redis`: - the `{{ template ...
}}` block will output `redis` - the Service name will be `redis-master` - A parent chart can set values for its dependencies - For example, in the parent's `values.yaml`: ```yaml redis: # Name of the dependency fullnameOverride: redis # Value passed to redis cluster: # Other values passed to redis enabled: false ``` - Users can also set variables with `--set=` or with `--values=` .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- class: extra-details ## Passing templates - We can even pass a template such as `{{ include "template.name" . }}` as a value, but beware: - it needs to be evaluated with the `tpl` function, on the child side - it is evaluated in the context of the child, with no access to parent variables <!-- FIXME this probably deserves an example, but I can't imagine one right now 😅 --> .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Getting rid of the `-master` - Even if we set that `fullnameOverride`, the Service name will be `redis-master` - To remove the `-master` suffix, we need to edit the chart itself - To edit the Redis chart, we need to *embed* it in our own chart - We need to: - decompress the chart - adjust `Chart.yaml` accordingly .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Embedding a dependency .lab[ - Decompress the chart: ```bash cd charts tar zxf redis-*.tgz cd ..
``` - Edit `Chart.yaml` and update the `dependencies` section: ```yaml dependencies: - name: redis version: '*' # No need to constrain the version, since we use local files ``` - Run `helm dep update` ] .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Updating the dependency - Now we can edit the Service name (it should be in `charts/redis/templates/redis-master-svc.yaml`) - Then try to deploy the whole chart! .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- ## Embedding a dependency multiple times - What if we need multiple copies of the same subchart? (for instance, if we need two completely different Redis servers) - We can declare a dependency multiple times, and specify an `alias`: ```yaml dependencies: - name: redis version: '*' alias: querycache - name: redis version: '*' alias: celeryqueue ``` - `.Chart.Name` will be set to the `alias` .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- class: extra-details ## Determining if we're in a subchart - `.Chart.IsRoot` indicates if we're in the top-level chart or in a sub-chart - Useful in charts that are designed to be used standalone or as dependencies - Example: generic chart - when used standalone (`.Chart.IsRoot` is `true`), use `.Release.Name` - when used as a subchart e.g.
with multiple aliases, use `.Chart.Name` .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- class: extra-details ## Compatibility with Helm 2 - Chart `apiVersion: v1` is the only version supported by Helm 2 - Chart v1 is also supported by Helm 3 - Use v1 if you want to be compatible with Helm 2 - Instead of `Chart.yaml`, dependencies are defined in `requirements.yaml` (and we should commit `requirements.lock` instead of `Chart.lock`) ??? :EN:- Depending on other charts :EN:- Charts within charts :FR:- Dépendances entre charts :FR:- Un chart peut en cacher un autre .debug[[k8s/helm-dependencies.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-dependencies.md)] --- class: pic .interstitial[] --- name: toc-helm-and-invalid-values class: title Helm and invalid values .nav[ [Previous part](#toc-charts-using-other-charts) | [Back to table of contents](#toc-part-2) | [Next part](#toc-helm-secrets) ] .debug[(automatically generated title slide)] --- # Helm and invalid values - A lot of Helm charts let us specify an image tag like this: ```bash helm install ... --set image.tag=v1.0 ``` - What happens if we make a small mistake, like this: ```bash helm install ... --set imagetag=v1.0 ``` - Or even, like this: ```bash helm install ... 
--set image=v1.0 ``` 🤔 .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## Making mistakes - In the first case: - we set `imagetag=v1.0` instead of `image.tag=v1.0` - Helm will ignore that value (if it's not used anywhere in templates) - the chart is deployed with the default value instead - In the second case: - we set `image=v1.0` instead of `image.tag=v1.0` - `image` will be a string instead of an object - Helm will *probably* fail when trying to evaluate `image.tag` .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## Preventing mistakes - To prevent the first mistake, we need to tell Helm: *"let me know if any additional (unknown) value was set!"* - To prevent the second mistake, we need to tell Helm: *"`image` should be an object, and `image.tag` should be a string!"* - We can do this with *values schema validation* .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## Helm values schema validation - We can write a spec representing the possible values accepted by the chart - Helm will check the validity of the values before trying to install/upgrade - If it finds problems, it will stop immediately - The spec uses [JSON Schema](https://json-schema.org/): *JSON Schema is a vocabulary that allows you to annotate and validate JSON documents.* - JSON Schema is designed for JSON, but can easily work with YAML too (or any language with `map|dict|associativearray` and `list|array|sequence|tuple`) .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## In practice - We need to put the JSON Schema spec in a file called 
`values.schema.json` (at the root of our chart; right next to `values.yaml` etc.) - The file is optional - We don't need to register or declare it in `Chart.yaml` or anywhere - Let's write a schema that will verify that ... - `image.repository` is an official image (string without slashes or dots) - `image.pullPolicy` can only be `Always`, `Never`, `IfNotPresent` .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## `values.schema.json` ```json { "$schema": "http://json-schema.org/schema#", "type": "object", "properties": { "image": { "type": "object", "properties": { "repository": { "type": "string", "pattern": "^[a-z0-9-_]+$" }, "pullPolicy": { "type": "string", "pattern": "^(Always|Never|IfNotPresent)$" } } } } } ``` .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## Testing our schema - Let's try to install a couple of releases with that schema! .lab[ - Try an invalid `pullPolicy`: ```bash helm install broken --set image.pullPolicy=ShallNotPass ``` - Try an invalid value: ```bash helm install should-break --set ImAgeTAg=toto ``` ] - The first one fails, but the second one still passes ... - Why? .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## Bailing out on unknown properties - We told Helm what properties (values) were valid - We didn't say what to do about additional (unknown) properties! - We can fix that with `"additionalProperties": false` .lab[ - Edit `values.schema.json` to add `"additionalProperties": false` ```json { "$schema": "http://json-schema.org/schema#", "type": "object", "additionalProperties": false, "properties": { ...
``` ] .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- ## Testing with unknown properties .lab[ - Try to pass an extra property: ```bash helm install should-break --set ImAgeTAg=toto ``` - Try to pass an extra nested property: ```bash helm install does-it-work --set image.hello=world ``` ] The first command should break. The second will not. `"additionalProperties": false` needs to be specified at each level. ??? :EN:- Helm schema validation :FR:- Validation de schema Helm .debug[[k8s/helm-values-schema-validation.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-values-schema-validation.md)] --- class: pic .interstitial[] --- name: toc-helm-secrets class: title Helm secrets .nav[ [Previous part](#toc-helm-and-invalid-values) | [Back to table of contents](#toc-part-2) | [Next part](#toc-exercise--umbrella-charts) ] .debug[(automatically generated title slide)] --- # Helm secrets - Helm can do *rollbacks*: - to previously installed charts - to previous sets of values - How and where does it store the data needed to do that? - Let's investigate! 
.debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Adding the repo - If you haven't done it before, you need to add the repo for that chart .lab[ - Add the repo that holds the chart for the OWASP Juice Shop: ```bash helm repo add juice https://charts.securecodebox.io ``` ] .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## We need a release - We need to install something with Helm - Let's use the `juice/juice-shop` chart as an example .lab[ - Install a release called `orange` with the chart `juice/juice-shop`: ```bash helm upgrade orange juice/juice-shop --install ``` - Let's upgrade that release, and change a value: ```bash helm upgrade orange juice/juice-shop --set ingress.enabled=true ``` ] .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Release history - Helm stores successive revisions of each release .lab[ - View the history for that release: ```bash helm history orange ``` ] Where does that come from? .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Investigate - Possible options: - local filesystem (no, because history is visible from other machines) - persistent volumes (no, Helm works even without them) - ConfigMaps, Secrets? .lab[ - Look for ConfigMaps and Secrets: ```bash kubectl get configmaps,secrets ``` ] -- We should see a number of secrets with TYPE `helm.sh/release.v1`. 
.debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Unpacking a secret - Let's find out what is in these Helm secrets .lab[ - Examine the secret corresponding to the second release of `orange`: ```bash kubectl describe secret sh.helm.release.v1.orange.v2 ``` (`v1` is the secret format; `v2` means revision 2 of the `orange` release) ] There is a key named `release`. .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Unpacking the release data - Let's see what's in this `release` thing! .lab[ - Dump the secret: ```bash kubectl get secret sh.helm.release.v1.orange.v2 \ -o go-template='{{ .data.release }}' ``` ] Secrets are encoded in base64. We need to decode that! .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Decoding base64 - We can pipe the output through `base64 -d` or use go-template's `base64decode` .lab[ - Decode the secret: ```bash kubectl get secret sh.helm.release.v1.orange.v2 \ -o go-template='{{ .data.release | base64decode }}' ``` ] -- ... Wait, this *still* looks like base64. What's going on? -- Let's try one more round of decoding! .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Decoding harder - Just add one more base64 decode filter .lab[ - Decode it twice: ```bash kubectl get secret sh.helm.release.v1.orange.v2 \ -o go-template='{{ .data.release | base64decode | base64decode }}' ``` ] -- ... OK, that was *a lot* of binary data. What should we do with it? 
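
The decoding steps above can be rehearsed offline with a small Python sketch. It builds a synthetic payload (the `release` dictionary and its fields are made up for illustration, not taken from a real cluster) and wraps it the way we understand the data to be stored: JSON, then gzip, then one base64 layer added by Helm and another one added by the Secret itself. The check on the first two bytes is our assumption about how a tool could recognize the format: gzip streams start with the bytes `1f 8b`.

```python
import base64
import gzip
import json

# Hypothetical release record; a real one has more fields (chart, manifest...).
release = {"name": "orange", "namespace": "default", "version": 2}

# Encode: JSON -> gzip -> base64 -> base64 (two layers, as observed above).
compressed = gzip.compress(json.dumps(release).encode("utf-8"))
stored = base64.b64encode(base64.b64encode(compressed))

# Decode: two rounds of base64, like `base64decode | base64decode`.
once = base64.b64decode(stored)
twice = base64.b64decode(once)

# After one round, the data is still printable base64 text.
still_text = once.decode("ascii").isprintable()

# After two rounds, the gzip magic number (0x1f 0x8b) shows up.
is_gzip = twice[:2] == b"\x1f\x8b"

# Decompress and parse the JSON document.
decoded = json.loads(gzip.decompress(twice))
print(still_text, is_gzip, decoded["name"], decoded["version"])
```

This explains why a single round of decoding "still looks like base64": there are two layers of encoding around the compressed JSON.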
.debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Guessing data type - We could use `file` to figure out the data type .lab[ - Pipe the decoded release through `file -`: ```bash kubectl get secret sh.helm.release.v1.orange.v2 \ -o go-template='{{ .data.release | base64decode | base64decode }}' \ | file - ``` ] -- Gzipped data! It can be decompressed with `gunzip -c`. .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Uncompressing the data - Let's uncompress the data and save it to a file .lab[ - Rerun the previous command, but with `| gunzip -c > release-info`: ```bash kubectl get secret sh.helm.release.v1.orange.v2 \ -o go-template='{{ .data.release | base64decode | base64decode }}' \ | gunzip -c > release-info ``` - Look at `release-info`: ```bash cat release-info ``` ] -- It's a bundle of ~~YAML~~ JSON. .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Looking at the JSON If we inspect that JSON (e.g. with `jq keys release-info`), we see: - `chart` (contains the entire chart used for that release) - `config` (contains the values that we've set) - `info` (date of deployment, status messages) - `manifest` (YAML generated from the templates) - `name` (name of the release, so `orange`) - `namespace` (namespace where we deployed the release) - `version` (revision number within that release; starts at 1) The chart is in a structured format, but it's entirely captured in this JSON. .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- ## Conclusions - Helm stores the information for each release revision in a Secret in the namespace of the release - The Secret holds a JSON object (gzipped and encoded in base64) - It contains the manifests generated for that release - ...
And everything needed to rebuild these manifests (including the full source of the chart, and the values used) - This allows arbitrary rollbacks, as well as tweaking values even without having access to the source of the chart (or the chart repo) used for deployment ??? :EN:- Deep dive into Helm internals :FR:- Fonctionnement interne de Helm .debug[[k8s/helm-secrets.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helm-secrets.md)] --- class: pic .interstitial[] --- name: toc-exercise--umbrella-charts class: title Exercise — Umbrella Charts .nav[ [Previous part](#toc-helm-secrets) | [Back to table of contents](#toc-part-2) | [Next part](#toc-managing-our-stack-with-helmfile) ] .debug[(automatically generated title slide)] --- # Exercise — Umbrella Charts - We want to deploy dockercoins with a single Helm chart - That chart will reuse the "generic chart" created previously - This will require expressing dependencies, and using the `alias` keyword - It will also require minor changes in the templates .debug[[exercises/helm-umbrella-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-umbrella-chart-details.md)] --- ## Goal - We want to be able to install a copy of dockercoins with: ```bash helm install dockercoins ./umbrella-chart ``` - It should leverage the generic chart created earlier (and instantiate it five times, once per component of dockercoins) - The values YAML files created earlier should be merged into a single one .debug[[exercises/helm-umbrella-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-umbrella-chart-details.md)] --- ## Bonus - We want to replace our redis component with a better one - We're going to use Bitnami's redis chart (find it on the Artifact Hub) - However, a lot of adjustments will be required!
(check following slides if you need hints) .debug[[exercises/helm-umbrella-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-umbrella-chart-details.md)] --- ## Hints (1/2) - We will probably have to disable persistence - by default, the chart enables persistence - this works only if we have a default StorageClass - this can be disabled by setting a value - We will also have to disable authentication - by default, the chart generates a password for Redis - the dockercoins code doesn't use one - this can also be changed by setting a value .debug[[exercises/helm-umbrella-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-umbrella-chart-details.md)] --- ## Hints (2/2) - The dockercoins code connects to `redis` - The chart generates different service names - Option 1: - vendor the chart in our umbrella chart - change the service name in the chart - Option 2: - add a Service of type ExternalName - it will be a DNS alias from `redis` to `redis-whatever.NAMESPACE.svc.cluster.local` - for extra points, make the domain configurable .debug[[exercises/helm-umbrella-chart-details.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/exercises/helm-umbrella-chart-details.md)] --- class: pic .interstitial[] --- name: toc-managing-our-stack-with-helmfile class: title Managing our stack with `helmfile` .nav[ [Previous part](#toc-exercise--umbrella-charts) | [Back to table of contents](#toc-part-3) | [Next part](#toc-ytt) ] .debug[(automatically generated title slide)] --- # Managing our stack with `helmfile` - We've installed a few things with Helm - And others with raw YAML manifests - Perhaps you've used Kustomize sometimes - How can we automate all this? Make it reproducible? 
.debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## Requirements - We want something that is *idempotent* = running it 1, 2, 3 times should only install the stack once - We want something that handles updates = modifying / reconfiguring without restarting from scratch - We want something that is configurable = with e.g. configuration files, environment variables... - We want something that can handle *partial removals* = ability to remove one element without affecting the rest - Inspiration: Terraform, Docker Compose... .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## Shell scripts? ✅ Idempotent, thanks to `kubectl apply -f`, `helm upgrade --install` ✅ Handles updates (edit script, re-run) ✅ Configurable ❌ Partial removals If we remove an element from our script, it won't be uninstalled automatically. .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## Umbrella chart? Helm chart with dependencies on other charts.
✅ Idempotent ✅ Handles updates ✅ Configurable (with Helm values: YAML files and `--set`) ✅ Partial removals ❌ Complex (requires learning advanced Helm features) ❌ Requires everything to be a Helm chart (adds (lots of) boilerplate) .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## Helmfile https://github.com/helmfile/helmfile ✅ Idempotent ✅ Handles updates ✅ Configurable (with values files, environment variables, and more) ✅ Partial removals ✅ Fairly easy to get started 🐙 Sometimes feels like summoning unspeakable powers / staring down the abyss .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## What `helmfile` can install - Helm charts from remote Helm repositories - Helm charts from remote git repositories - Helm charts from local directories - Kustomizations - Directories with raw YAML manifests .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## How `helmfile` works - Everything is defined in a main `helmfile.yaml` - That file defines: - `repositories` (remote Helm repositories) - `releases` (things to install: Charts, YAML...) - `environments` (optional: to specialize prod vs staging vs ...)
- Helm-style values files can be loaded in `environments` - These values can then be used in the rest of the Helmfile - Examples: [install essentials on a cluster][helmfile-ex-1], [run a Bento stack][helmfile-ex-2] [helmfile-ex-1]: https://github.com/jpetazzo/beyond-load-balancers/blob/main/helmfile.yaml [helmfile-ex-2]: https://github.com/jpetazzo/beyond-load-balancers/blob/main/bento/helmfile.yaml .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## `helmfile` commands - `helmfile init` (optional; downloads plugins if needed) - `helmfile apply` (updates all releases that have changed) - `helmfile sync` (updates all releases even if they haven't changed) - `helmfile destroy` (guess!) .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- ## Helmfile tips As seen in [this example](https://github.com/jpetazzo/beyond-load-balancers/blob/main/bento/helmfile.yaml#L21): - variables can be used to simplify the file - configuration values and secrets can be loaded from external sources (Kubernetes Secrets, Vault... See [vals] for details) - current namespace isn't exposed by default - there's often more than one way to do it! (this particular section could be improved by using Bento `${...}`) [vals]: https://github.com/helmfile/vals ??? ## 🏗️ Let's build something! - Write a helmfile (or two) to set up today's entire stack on a brand new cluster!
- Suggestion: - one helmfile for singleton, cluster components <br/> (All our operators: Prometheus, Grafana, KEDA, CNPG, RabbitMQ Operator) - one helmfile for the application stack <br/> (Bento, PostgreSQL cluster, RabbitMQ) .debug[[k8s/helmfile.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/helmfile.md)] --- class: pic .interstitial[] --- name: toc-ytt class: title YTT .nav[ [Previous part](#toc-managing-our-stack-with-helmfile) | [Back to table of contents](#toc-part-3) | [Next part](#toc-git-based-workflows-gitops) ] .debug[(automatically generated title slide)] --- # YTT - YAML Templating Tool - Part of [Carvel] (a set of tools for Kubernetes application building, configuration, and deployment) - Can be used for any YAML (Kubernetes, Compose, CI pipelines...) [Carvel]: https://carvel.dev/ .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Features - Manipulate data structures, not text (≠ Helm) - Deterministic, hermetic execution - Define variables, blocks, functions - Write code in Starlark (dialect of Python) - Define and override values (Helm-style) - Patch resources arbitrarily (Kustomize-style) .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Getting started - Install `ytt` ([binary download][download]) - Start with one (or multiple) Kubernetes YAML files *(without comments; no `#` allowed at this point!)* - `ytt -f one.yaml -f two.yaml | kubectl apply -f-` - `ytt -f. | kubectl apply -f-` [download]: https://github.com/vmware-tanzu/carvel-ytt/releases/latest .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## No comments?!? - Replace `#` with `#!` - `#@` is used by ytt - It's a kind of template tag, for instance: ```yaml #! 
This is a comment #@ a = 42 #@ b = "*" a: #@ a b: #@ b operation: multiply result: #@ a*b ``` - `#@` at the beginning of a line = instruction - `#@` somewhere else = value .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Building strings - Concatenation: ```yaml #@ repository = "dockercoins" #@ tag = "v0.1" containers: - name: worker image: #@ repository + "/worker:" + tag ``` - Formatting: ```yaml #@ repository = "dockercoins" #@ tag = "v0.1" containers: - name: worker image: #@ "{}/worker:{}".format(repository, tag) ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Defining functions - Reusable functions can be written in Starlark (=Python) - Blocks (`def`, `if`, `for`...) must be terminated with `#@ end` - Example: ```yaml #@ def image(component, repository="dockercoins", tag="v0.1"): #@ return "{}/{}:{}".format(repository, component, tag) #@ end containers: - name: worker image: #@ image("worker") - name: hasher image: #@ image("hasher") ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Structured data - Functions can return complex types - Example: defining a common set of labels ```yaml #@ name = "worker" #@ def labels(component): #@ return { #@ "app": component, #@ "container.training/generated-by": "ytt", #@ } #@ end kind: Pod apiVersion: v1 metadata: name: #@ name labels: #@ labels(name) ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## YAML functions - Function body can also be straight YAML: ```yaml #@ name = "worker" #@ def labels(component): app: #@ component container.training/generated-by: ytt #@ end kind: Pod apiVersion: v1 metadata: name: #@ name labels: #@ labels(name) ``` - The return type of the function is then a [YAML fragment][fragment] [fragment]: https://carvel.dev/ytt/docs/v0.41.0/ 
.debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## More YAML functions - We can load library functions: ```yaml #@ load("@ytt:sha256", "sha256") ``` - This is (sort of) equivalent to `from ytt.sha256 import sha256` - Functions can contain a mix of code and YAML fragments: ```yaml #@ load("@ytt:sha256", "sha256") #@ def annotations(): #@ author = "Jérôme Petazzoni" author: #@ author author_hash: #@ sha256.sum(author)[:8] #@ end annotations: #@ annotations() ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Data values - We can define a *schema* in a separate file: ```yaml #@data/values-schema --- #! there must be a "---" here! repository: dockercoins tag: v0.1 ``` - This defines the data values (=customizable parameters), as well as their *types* and *default values* - Technically, `#@data/values-schema` is an annotation, and it applies to a YAML document; so the following element must be a YAML document - This is conceptually similar to Helm's *values* file <br/> (but with type enforcement as a bonus) .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Using data values - Requires loading `@ytt:data` - Values are then available in `data.values` - Example: ```yaml #@ load("@ytt:data", "data") #@ def image(component): #@ return "{}/{}:{}".format(data.values.repository, component, data.values.tag) #@ end #@ name = "worker" containers: - name: #@ name image: #@ image(name) ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Overriding data values - There are many ways to set and override data values: - plain YAML files - data value overlays - environment variables - command-line flags - Precedence of the different methods is defined in the [docs][data-values-merge-order] [data-values-merge-order]:
https://carvel.dev/ytt/docs/v0.41.0/ytt-data-values/#data-values-merge-order .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Values in plain YAML files - Content of `values.yaml`: ```yaml tag: latest ``` - Values get merged with `--data-values-file`: ```bash ytt -f config/ --data-values-file values.yaml ``` - Multiple files can be specified - These files can also be URLs! .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Data value overlay - Content of `values.yaml`: ```yaml #@data/values --- #! must have --- here tag: latest ``` - Values get merged by being specified like "normal" files: ```bash ytt -f config/ -f values.yaml ``` - Multiple files can be specified .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Set a value with a flag - Set a string value: ```bash ytt -f config/ --data-value tag=latest ``` - Set a YAML value (useful to parse it as e.g. 
integer, boolean...): ```bash ytt -f config/ --data-value-yaml replicas=10 ``` - Read a string value from a file: ```bash ytt -f config/ --data-value-file ca_cert=cert.pem ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Set values from environment variables - Set environment variables with a prefix: ```bash export VAL_tag=latest export VAL_repository=ghcr.io/dockercoins ``` - Use the variables as strings: ```bash ytt -f config/ --data-values-env VAL ``` - Or parse them as YAML: ```bash ytt -f config/ --data-values-env-yaml VAL ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Lines starting with `#@` - This generates an empty document: ```yaml #@ def hello(): hello: world #@ end #@ hello() ``` - Do this instead: ```yaml #@ def hello(): hello: world #@ end --- #@ hello() ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Generating multiple documents, take 1 - This won't work: ```yaml #@ def app(): kind: Deployment apiVersion: apps/v1 --- #! separate from next document kind: Service apiVersion: v1 #@ end --- #@ app() ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Generating multiple documents, take 2 - This won't work either: ```yaml #@ def app(): --- #! the initial separator indicates "this is a Document Set" kind: Deployment apiVersion: apps/v1 --- #! separate from next document kind: Service apiVersion: v1 #@ end --- #@ app() ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Generating multiple documents, take 3 - We must use the `template` module: ```yaml #@ load("@ytt:template", "template") #@ def app(): --- #! the initial separator indicates "this is a Document Set" kind: Deployment apiVersion: apps/v1 --- #! 
separate from next document kind: Service apiVersion: v1 #@ end --- #@ template.replace(app()) ``` - `template.replace(...)` is the only way (?) to replace one element with many .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Libraries - A reusable ytt configuration can be transformed into a library - Put it in a subdirectory named `_ytt_lib/whatever`, then: ```yaml #@ load("@ytt:library", "library") #@ load("@ytt:template", "template") #@ whatever = library.get("whatever") #@ my_values = {"tag": "latest", "registry": "..."} #@ output = whatever.with_data_values(my_values).eval() --- #@ template.replace(output) ``` - The `with_data_values()` step is optional, but useful to "configure" the library - Note the whole combo: ```yaml template.replace(library.get("...").with_data_values(...).eval()) ``` .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Overlays - Powerful, but complex, but powerful! 💥 - Define transformations that are applied after generating the whole document set - General idea: - select YAML nodes to be transformed with an `#@overlay/match` decorator - write a YAML snippet with the modifications to be applied <br/> (a bit like a strategic merge patch) .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Example ```yaml #@ load("@ytt:overlay", "overlay") #@ selector = {"kind": "Deployment", "metadata": {"name": "worker"}} #@overlay/match by=overlay.subset(selector) --- spec: replicas: 10 ``` - By default, `#@overlay/match` must find *exactly* one match (that can be changed by specifying `expects=...`, `missing_ok=True`... 
see [docs][docs-ytt-overlaymatch]) - By default, the specified fields (here, `spec.replicas`) must exist (that can also be changed by annotating the optional fields) [docs-ytt-overlaymatch]: https://carvel.dev/ytt/docs/v0.41.0/lang-ref-ytt-overlay/#overlaymatch .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Matching using a YAML document ```yaml #@ load("@ytt:overlay", "overlay") #@ def match(): kind: Deployment metadata: name: worker #@ end #@overlay/match by=overlay.subset(match()) --- spec: replicas: 10 ``` - This is equivalent to the subset match of the previous slide - It will find YAML nodes having all the listed fields .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Removing a field ```yaml #@ load("@ytt:overlay", "overlay") #@ def match(): kind: Deployment metadata: name: worker #@ end #@overlay/match by=overlay.subset(match()) --- spec: #@overlay/remove replicas: ``` - This would remove the `replicas:` field from a specific Deployment spec - This could be used e.g. when enabling autoscaling .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Selecting multiple nodes ```yaml #@ load("@ytt:overlay", "overlay") #@ def match(): kind: Deployment #@ end #@overlay/match by=overlay.subset(match()), expects="1+" --- spec: #@overlay/remove replicas: ``` - This would match all Deployments <br/> (assuming that *at least one* exists) - It would remove the `replicas:` field from their spec <br/> (the field must exist!) 
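
To get a feel for what a subset match does, here is a rough Python model of the idea. It is not ytt's implementation: `is_subset` and `remove_replicas` are hypothetical helpers, the sample documents are made up, and lists are simply compared for equality, which may differ from what `overlay.subset` does.

```python
def is_subset(selector, node):
    """Return True if every field listed in selector is present in node."""
    if isinstance(selector, dict):
        return isinstance(node, dict) and all(
            key in node and is_subset(value, node[key])
            for key, value in selector.items()
        )
    # Scalars (and, in this simplified model, lists) must be equal.
    return selector == node


def remove_replicas(documents, selector):
    """Remove spec.replicas from every matching document; return match count."""
    matches = [doc for doc in documents if is_subset(selector, doc)]
    if not matches:
        # Loosely mirrors expects="1+": at least one match is required.
        raise ValueError("expected at least one matching document")
    for doc in matches:
        # Raises KeyError if the field is missing ("the field must exist").
        del doc["spec"]["replicas"]
    return len(matches)


# Made-up document set for illustration.
documents = [
    {"kind": "Deployment", "metadata": {"name": "worker"}, "spec": {"replicas": 3}},
    {"kind": "Deployment", "metadata": {"name": "hasher"}, "spec": {"replicas": 1}},
    {"kind": "Service", "metadata": {"name": "worker"}, "spec": {"ports": []}},
]

matched = remove_replicas(documents, {"kind": "Deployment"})
print(matched, documents[0]["spec"], documents[2]["spec"])
```

A selector such as `{"kind": "Deployment", "metadata": {"name": "worker"}}` would narrow the match down to a single document, which corresponds to the default "exactly one match" behavior described earlier.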
.debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Adding a field ```yaml #@ load("@ytt:overlay", "overlay") #@overlay/match by=overlay.all, expects="1+" --- metadata: #@overlay/match missing_ok=True annotations: #@overlay/match expects=0 rainbow: 🌈 ``` - `#@overlay/match missing_ok=True` <br/> *will match whether our resources already have annotations or not* - `#@overlay/match expects=0` <br/> *will only match if the `rainbow` annotation doesn't exist* <br/> *(to make sure that we don't override/replace an existing annotation)* .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Overlays vs data values - The documentation has a [detailed discussion][data-values-vs-overlays] about this question - In short: - values = for parameters that are exposed to the user - overlays = for arbitrary extra modifications - Values are easier to use (use them when possible!) - Fall back to overlays when values don't expose what you need (keeping in mind that overlays are harder to write/understand/maintain) [data-values-vs-overlays]: https://carvel.dev/ytt/docs/v0.41.0/data-values-vs-overlays/ .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Gotchas - Reminder: put your `#@` at the right place! ```yaml #! This will generate "hello, world!" --- #@ "{}, {}!".format("hello", "world") ``` ```yaml #!
But this will generate an empty document --- #@ "{}, {}!".format("hello", "world") ``` - Also, don't use YAML anchors and aliases (`&foo` and `*foo`) - They don't mix well with ytt - Remember to use `template.replace(...)` when generating multiple nodes (or to update lists or arrays without replacing them entirely) .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- ## Next steps with ytt - Read this documentation page about [injecting secrets][secrets] - Check the [FAQ]; it gives some insights about what's possible with ytt - Exercise idea: write an overlay that will find all ConfigMaps mounted in Pods... ...and annotate the Pod with a hash of the ConfigMap [FAQ]: https://carvel.dev/ytt/docs/v0.41.0/faq/ [secrets]: https://carvel.dev/ytt/docs/v0.41.0/injecting-secrets/ ??? :EN:- YTT :FR:- YTT .debug[[k8s/ytt.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/ytt.md)] --- class: pic .interstitial[] --- name: toc-git-based-workflows-gitops class: title Git-based workflows (GitOps) .nav[ [Previous part](#toc-ytt) | [Back to table of contents](#toc-part-3) | [Next part](#toc-fluxcd) ] .debug[(automatically generated title slide)] --- # Git-based workflows (GitOps) - Deploying with `kubectl` has downsides: - we don't know *who* deployed *what* and *when* - there is no audit trail (except the API server logs) - there is no easy way to undo most operations - there is no review/approval process (like for code reviews) - We have all these things for *code*, though - Can we manage cluster state like we manage our source code?
.debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Reminder: Kubernetes is *declarative* - All we do is create/change resources - These resources have a perfect YAML representation - All we do is manipulate these YAML representations (`kubectl run` generates a YAML file that gets applied) - We can store these YAML representations in a code repository - We can version that code repository and maintain it with best practices - define which branch(es) can go to qa/staging/production - control who can push to which branches - have formal review processes, pull requests, test gates... .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Enabling git-based workflows - There are many tools out there to help us do that, with different approaches - "Git host centric" approach: GitHub Actions, GitLab... *the workflows/actions are directly initiated by the git platform* - "Kubernetes cluster centric" approach: [ArgoCD], [FluxCD][Flux]... *controllers run on our clusters and trigger on repo updates* - This is not an exhaustive list (see also: Jenkins) - We're going to talk mostly about "Kubernetes cluster centric" approaches here [ArgoCD]: https://argoproj.github.io/cd/ [Flux]: https://fluxcd.io/ .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## The road to production In no specific order, we need to at least: - Choose a tool - Choose a cluster / app / namespace layout <br/> (one cluster per app, different clusters for prod/staging...) - Choose a repository layout <br/> (different repositories, directories, branches per app, env, cluster...)
- Choose an installation / bootstrap method - Choose how new apps / environments / versions will be deployed - Choose how new images will be built .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Flux vs ArgoCD (1/2) - Flux: - fancy setup with an (optional) dedicated `flux bootstrap` command <br/> (with support for specific git providers, repo creation...) - deploying an app requires multiple CRDs <br/> (Kustomization, HelmRelease, GitRepository...) - supports Helm charts, Kustomize, raw YAML - ArgoCD: - simple setup (just apply YAMLs / install Helm chart) - fewer CRDs (basic workflow can be implemented with a single "Application" resource) - supports Helm charts, Jsonnet, Kustomize, raw YAML, and arbitrary plugins .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Flux vs ArgoCD (2/2) - Flux: - sync interval is configurable per app - no web UI out of the box - CLI relies on Kubernetes API access - CLI can easily generate custom resource manifests (with `--export`) - self-hosted (flux controllers are managed by flux itself by default) - one flux instance manages a single cluster - ArgoCD: - sync interval is configured globally - comes with a web UI - CLI can use Kubernetes API or separate API and authentication system - one ArgoCD instance can manage multiple clusters .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Cluster, app, namespace layout - One cluster per app, different namespaces for environments? - One cluster per environment, different namespaces for apps? - Everything on a single cluster? One cluster per combination? - Something in between: - prod cluster, database cluster, dev/staging/etc cluster - prod+db cluster per app, shared dev/staging/etc cluster - And more! Note: this decision isn't really tied to GitOps!
.debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Repository layout So many different possibilities! - Source repos - Cluster/infra repos/branches/directories - "Deployment" repos (with manifests, charts) - Different repos/branches/directories for environments 🤔 How to decide? .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Permissions - Different teams/companies = different repos - separate platform team → separate "infra" vs "apps" repos - teams working on different apps → different repos per app - Branches can be "protected" (`production`, `main`...) (don't need separate repos for separate environments) - Directories will typically have the same permissions - Managing directories is easier than branches - But branches are more "powerful" (cherrypicking, rebasing...) .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Resource hierarchy - Git-based deployments are managed by Kubernetes resources (e.g. Kustomization, HelmRelease with Flux; Application with ArgoCD) - We will call these resources "GitOps resources" - These resources need to be managed like any other Kubernetes resource (YAML manifests, Kustomizations, Helm charts) - They can be managed with Git workflows too! .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Cluster / infra management - How do we provision clusters? - Manual "one-shot" provisioning (CLI, web UI...) - Automation with Terraform, Ansible... 
- Kubernetes-driven systems (Crossplane, CAPI) - Infrastructure can also be managed with GitOps .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Example 1 - Managed with YAML/Charts: - core components (CNI, CSI, Ingress, logging, monitoring...) - GitOps controllers - critical application foundations (database operator, databases) - GitOps manifests - Managed with GitOps: - applications - staging databases .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Example 2 - Managed with YAML/Charts: - essential components (CNI, CoreDNS) - initial installation of GitOps controllers - Managed with GitOps: - upgrades of GitOps controllers - core components (CSI, Ingress, logging, monitoring...) - operators, databases - more GitOps manifests for applications! .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- ## Concrete example - Source code repository (not shown here) - Infrastructure repository (shown below), single branch ``` ├── charts/ <--- could also be in separate app repos │ ├── dockercoins/ │ └── color/ ├── apps/ <--- YAML manifests for GitOps resources │ ├── dockercoins/ (might reference the "charts" above, │ ├── blue/ and/or include environment-specific │ ├── green/ manifests to create e.g. namespaces, │ ├── kube-prometheus-stack/ configmaps, secrets...) │ ├── cert-manager/ │ └── traefik/ └── clusters/ <--- per-cluster; will typically reference ├── prod/ the "apps" above, possibly extending └── dev/ or adding configuration resources too ``` ??? 
:EN:- GitOps :FR:- GitOps .debug[[k8s/gitworkflows.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/gitworkflows.md)] --- class: pic .interstitial[] --- name: toc-fluxcd class: title FluxCD .nav[ [Previous part](#toc-git-based-workflows-gitops) | [Back to table of contents](#toc-part-3) | [Next part](#toc-argocd) ] .debug[(automatically generated title slide)] --- # FluxCD - We're going to implement a basic GitOps workflow with Flux - Pushing to `main` will automatically deploy to the clusters - There will be two clusters (`dev` and `prod`) - The two clusters will have similar (but slightly different) workloads .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Repository structure This is (approximately) what we're going to do: ``` ├── charts/ <--- could also be in separate app repos │ ├── dockercoins/ │ └── color/ ├── apps/ <--- YAML manifests for GitOps resources │ ├── dockercoins/ (might reference the "charts" above, │ ├── blue/ and/or include environment-specific │ ├── green/ manifests to create e.g. namespaces, │ ├── kube-prometheus-stack/ configmaps, secrets...) 
│ ├── cert-manager/ │ └── traefik/ └── clusters/ <--- per-cluster; will typically reference ├── prod/ the "apps" above, possibly extending └── dev/ or adding configuration resources too ``` .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Resource graph <pre class="mermaid"> flowchart TD H/D["charts/dockercoins<br/>(Helm chart)"] H/C["charts/color<br/>(Helm chart)"] A/D["apps/dockercoins/flux.yaml<br/>(HelmRelease)"] A/B["apps/blue/flux.yaml<br/>(HelmRelease)"] A/G["apps/green/flux.yaml<br/>(HelmRelease)"] A/CM["apps/cert-manager/flux.yaml<br/>(HelmRelease)"] A/P["apps/kube-prometheus-stack/flux.yaml<br/>(HelmRelease + Kustomization)"] A/T["traefik/flux.yaml<br/>(HelmRelease)"] C/D["clusters/dev/kustomization.yaml<br/>(Kustomization)"] C/P["clusters/prod/kustomization.yaml<br/>(Kustomization)"] C/D --> A/B C/D --> A/D C/D --> A/G C/P --> A/D C/P --> A/G C/P --> A/T C/P --> A/CM C/P --> A/P A/D --> H/D A/B --> H/C A/G --> H/C A/P --> CHARTS & PV["apps/kube-prometheus-stack/manifests/configmap.yaml<br/>(Helm values)"] A/CM --> CHARTS A/T --> CHARTS CHARTS["Charts on external repos"] </pre> .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Getting ready - Let's make sure we have two clusters - It's OK to use local clusters (kind, minikube...) - We might run into resource limits, though (pay attention to `Pending` pods!) - We need to install the Flux CLI ([packages], [binaries]) - **Highly recommended:** set up CLI completion! 
- Of course we'll need a Git service, too (we're going to use GitHub here) [packages]: https://fluxcd.io/flux/get-started/ [binaries]: https://github.com/fluxcd/flux2/releases .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## GitHub setup - Generate a GitHub token: https://github.com/settings/tokens/new - Give it "repo" access - This token will be used by the `flux bootstrap github` command later - It will create a repository and configure it (SSH key...) - The token can be revoked afterwards .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Flux bootstrap .lab[ - Let's set a few variables for convenience, and create our repository: ```bash export GITHUB_TOKEN=... export GITHUB_USER=changeme export GITHUB_REPO=alsochangeme export FLUX_CLUSTER=dev flux bootstrap github \ --owner=$GITHUB_USER \ --repository=$GITHUB_REPO \ --branch=main \ --path=./clusters/$FLUX_CLUSTER \ --personal --private=false ``` ] Problems? check next slide! .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## What could go wrong? - `flux bootstrap` will create or update the repository on GitHub - Then it will install Flux controllers to our cluster - Then it waits for these controllers to be up and running and ready - Check pod status in `flux-system` - If pods are `Pending`, check that you have enough resources on your cluster - For testing purposes, it should be fine to lower or remove Flux `requests`! (but don't do that in production!) - If anything goes wrong, don't worry, we can just re-run the bootstrap .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- class: extra-details ## Idempotence - It's OK to run that same `flux bootstrap` command multiple times! 
- If the repository already exists, it will re-use it (it won't destroy or empty it) - If the path `./clusters/$FLUX_CLUSTER` already exists, it will update it - It's totally fine to re-run `flux bootstrap` if something fails - It's totally fine to run it multiple times on different clusters - Or even to run it multiple times for the *same* cluster (to reinstall Flux on that cluster after a cluster wipe / reinstall) .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## What do we get? - Let's look at what `flux bootstrap` installed on the cluster .lab[ - Look inside the `flux-system` namespace: ```bash kubectl get all --namespace flux-system ``` - Look at `kustomizations` custom resources: ```bash kubectl get kustomizations --all-namespaces ``` - See what the `flux` CLI tells us: ```bash flux get all ``` ] .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Deploying with GitOps - We'll need to add/edit files on the repository - We can do it by using `git clone`, local edits, `git commit`, `git push` - Or by editing online on the GitHub website .lab[ - Create a manifest; for instance `clusters/dev/flux-system/blue.yaml` - Add that manifest to `clusters/dev/kustomization.yaml` - Commit and push both changes to the repository ] .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Waiting for reconciliation - Compare the git hash that we pushed and the one shown with `kubectl get ` - Option 1: wait for Flux to pick up the changes in the repository (the default interval for git repositories is 1 minute, so that's fast) - Option 2: use `flux reconcile source git flux-system` (this puts an annotation on the appropriate resource, triggering an immediate check) - Option 3: set up receiver webhooks (so that git updates trigger immediate reconciliation) 
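As a sketch of option 3, a Flux `Receiver` could look like the manifest below. The resource name `github-receiver` and the Secret name `webhook-token` are hypothetical placeholders (the Secret would need to exist in `flux-system` and hold a `token` key), and the API versions assume a recent Flux release, so it is worth checking them with `kubectl api-resources` first.

```yaml
# Sketch only: names and API versions are assumptions, adjust to your setup.
apiVersion: notification.toolkit.fluxcd.io/v1
kind: Receiver
metadata:
  name: github-receiver          # hypothetical name
  namespace: flux-system
spec:
  type: github
  events:
    - ping
    - push
  secretRef:
    name: webhook-token          # hypothetical Secret with a "token" key
  resources:
    # The GitRepository created by "flux bootstrap"
    - apiVersion: source.toolkit.fluxcd.io/v1
      kind: GitRepository
      name: flux-system
```

The notification controller then reports a webhook path in the Receiver status. That path has to be reachable from GitHub, which typically means exposing the controller through an Ingress or a LoadBalancer.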
.debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Checking progress - `flux logs` - `kubectl get gitrepositories --all-namespaces` - `kubectl get kustomizations --all-namespaces` .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Did it work? -- - No! -- - Why? -- - We need to indicate the namespace where the app should be deployed - Either in the YAML manifests - Or in the `kustomization` custom resource (using field `spec.targetNamespace`) - Add the namespace to the manifest and try again! .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Adding an app in a reusable way - Let's see a technique to add a whole app (with multiple resource manifests) - We want to minimize code repetition (i.e. easy to add on multiple clusters with minimal changes) .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## The plan - Add the app manifests in a directory (e.g.: `apps/myappname/manifests`) - Create a kustomization manifest for the app and its namespace (e.g.: `apps/myappname/flux.yaml`) - The kustomization manifest will refer to the app manifests - Add the kustomization manifest to the top-level `flux-system` kustomization .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Creating the manifests - All commands below should be executed at the root of the repository .lab[ - Put application manifests in their directory: ```bash mkdir -p apps/dockercoins/manifests cp ~/container.training/k8s/dockercoins.yaml apps/dockercoins/manifests ``` - Create kustomization manifest: ```bash flux create kustomization dockercoins \ --source=GitRepository/flux-system \ --path=./apps/dockercoins/manifests/ \ --target-namespace=dockercoins \ --prune=true --export > 
apps/dockercoins/flux.yaml ``` ] .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Creating the target namespace - When deploying *helm releases*, it is possible to automatically create the namespace - When deploying *kustomizations*, we need to create it explicitly - Let's put the namespace with the kustomization manifest (so that the whole app can be mediated through a single manifest) .lab[ - Add the target namespace to the kustomization manifest: ```bash echo "--- kind: Namespace apiVersion: v1 metadata: name: dockercoins" >> apps/dockercoins/flux.yaml ``` ] .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Linking the kustomization manifest - Edit `clusters/dev/flux-system/kustomization.yaml` - Add a line to reference the kustomization manifest that we created: ```yaml - ../../../apps/dockercoins/flux.yaml ``` - `git add` our manifests, `git commit`, `git push` (check with `git status` that we haven't forgotten anything!) - `flux reconcile` or wait for the changes to be picked up .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Installing with Helm - We're going to see two different workflows: - installing a third-party chart <br/> (e.g. something we found on the Artifact Hub) - installing one of our own charts <br/> (e.g. 
a chart we authored ourselves) - The procedures are very similar .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Installing from a public Helm repository - Let's install [kube-prometheus-stack][kps] .lab[ - Create the Flux manifests: ```bash mkdir -p apps/kube-prometheus-stack flux create source helm kube-prometheus-stack \ --url=https://prometheus-community.github.io/helm-charts \ --export >> apps/kube-prometheus-stack/flux.yaml flux create helmrelease kube-prometheus-stack \ --source=HelmRepository/kube-prometheus-stack \ --chart=kube-prometheus-stack --release-name=kube-prometheus-stack \ --target-namespace=kube-prometheus-stack --create-target-namespace \ --export >> apps/kube-prometheus-stack/flux.yaml ``` ] [kps]: https://artifacthub.io/packages/helm/prometheus-community/kube-prometheus-stack .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Enable the app - Just like before, link the manifest from the top-level kustomization (`flux-system` in namespace `flux-system`) - `git add` / `git commit` / `git push` - We should now have a Prometheus+Grafana observability stack! .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Installing from a Helm chart in a git repo - In this example, the chart will be in the same repo - In the real world, it will typically be in a different repo! .lab[ - Generate a basic Helm chart: ```bash mkdir -p charts helm create charts/myapp ``` ] (This generates a chart which installs NGINX. A lot of things can be customized, though.) 
.debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Creating the Flux manifests - The invocation is very similar to our first example .lab[ - Generate the Flux manifest for the Helm release: ```bash mkdir apps/myapp flux create helmrelease myapp \ --source=GitRepository/flux-system \ --chart=charts/myapp \ --target-namespace=myapp --create-target-namespace \ --export > apps/myapp/flux.yaml ``` - Add a reference to that manifest to the top-level kustomization - `git add` / `git commit` / `git push` the chart, manifest, and kustomization ] .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Passing values - We can also configure our Helm releases with values - Using an existing `myvalues.yaml` file: `flux create helmrelease ... --values=myvalues.yaml` - Referencing an existing ConfigMap or Secret with a `values.yaml` key: `flux create helmrelease ... --values-from=ConfigMap/myapp` - The ConfigMap or Secret must be in the same Namespace as the HelmRelease (not the target namespace of that HelmRelease!) .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Gotchas - When creating a HelmRelease using a chart stored in a git repository, you must: - either bump the chart version (in `Chart.yaml`) after each change, - or set `spec.chart.spec.reconcileStrategy` to `Revision` - Why? 
- Flux installs helm releases using packaged artifacts - Artifacts are updated only when the Helm chart version changes - Unless `reconcileStrategy` is set to `Revision` (instead of the default `ChartVersion`) .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## More gotchas - There is a bug in Flux that prevents using identical subcharts with aliases - See [fluxcd/flux2#2505][flux2505] for details [flux2505]: https://github.com/fluxcd/flux2/discussions/2505 .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- ## Things that we didn't talk about... - Bucket sources - Image automation controller - Image reflector controller - And more! ??? :EN:- Implementing gitops with Flux :FR:- Workflow gitops avec Flux <!-- helm upgrade --install --repo https://dl.gitea.io/charts --namespace gitea --create-namespace gitea gitea \ --set persistence.enabled=false \ --set redis-cluster.enabled=false \ --set postgresql-ha.enabled=false \ --set postgresql.enabled=true \ --set gitea.config.session.PROVIDER=db \ --set gitea.config.cache.ADAPTER=memory \ # ### Boostrap Flux controllers ```bash mkdir -p flux/flux-system/gotk-components.yaml flux install --export > flux/flux-system/gotk-components.yaml kubectl apply -f flux/flux-system/gotk-components.yaml ``` ### Bootstrap GitRepository/Kustomization ```bash export REPO_URL="<gitlab_url>" DEPLOY_USERNAME="<username>" read -s DEPLOY_TOKEN flux create secret git flux-system --url="${REPO_URL}" --username="${DEPLOY_USERNAME}" --password="${DEPLOY_TOKEN}" flux create source git flux-system --url=$REPO_URL --branch=main --secret-ref flux-system --ignore-paths='/*,!/flux' --export > flux/flux-system/gotk-sync.yaml flux create kustomization flux-system --source=GitRepository/flux-system --path="./flux" --prune=true --export >> flux/flux-system/gotk-sync.yaml git add flux/ && git commit -m 'feat: Setup Flux' flux/ && git push 
kubectl apply -f flux/flux-system/gotk-sync.yaml ``` --> .debug[[k8s/flux.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/flux.md)] --- class: pic .interstitial[] --- name: toc-argocd class: title ArgoCD .nav[ [Previous part](#toc-fluxcd) | [Back to table of contents](#toc-part-3) | [Next part](#toc-) ] .debug[(automatically generated title slide)] --- # ArgoCD - We're going to implement a basic GitOps workflow with ArgoCD - Pushing to the default branch will automatically deploy to our clusters - There will be two clusters (`dev` and `prod`) - The two clusters will have similar (but slightly different) workloads  .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## ArgoCD concepts ArgoCD manages **applications** by **syncing** their **live state** with their **target state**. - **Application**: a group of Kubernetes resources managed by ArgoCD. <br/> Also a custom resource (`kind: Application`) managing that group of resources. - **Application source type**: the **Tool** used to build the application (Kustomize, Helm...) - **Target state**: the desired state of an **application**, as represented by the git repository. - **Live state**: the current state of the application on the cluster. - **Sync status**: whether or not the live state matches the target state. - **Sync**: the process of making an application move to its target state. <br/> (e.g. by applying changes to a Kubernetes cluster) (Check [ArgoCD core concepts](https://argo-cd.readthedocs.io/en/stable/core_concepts/) for more definitions!) .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Getting ready - Let's make sure we have two clusters - It's OK to use local clusters (kind, minikube...) - We need to install the ArgoCD CLI ([argocd-packages], [argocd-binaries]) - **Highly recommended:** set up CLI completion! 
- Of course we'll need a Git service, too .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Setting up ArgoCD - The easiest way is to use upstream YAML manifests - There is also a [Helm chart][argocd-helmchart] if we need more customization .lab[ - Create a namespace for ArgoCD and install it there: ```bash kubectl create namespace argocd kubectl apply --namespace argocd -f \ https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml ``` ] .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Logging in with the ArgoCD CLI - The CLI can talk to the ArgoCD API server or to the Kubernetes API server - For simplicity, we're going to authenticate and communicate with the Kubernetes API .lab[ - Configure the CLI to talk to the Kubernetes API (that's what the `--core` flag does): ```bash argocd login --core ``` - Check that everything is fine: ```bash argocd version ``` ] -- 🤔 `FATA[0000] error retrieving argocd-cm: configmap "argocd-cm" not found` .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## ArgoCD CLI shortcomings - When using "core" authentication, the ArgoCD CLI uses our current Kubernetes context (as defined in our kubeconfig file) - That context needs to point to the correct namespace (the namespace where we installed ArgoCD) - In fact, `argocd login --core` doesn't communicate at all with ArgoCD! 
(it only updates a local ArgoCD configuration file) .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Trying again in the right namespace - We will need to run all `argocd` commands in the `argocd` namespace (this limitation only applies to "core" authentication; see [issue 14167][issue14167]) .lab[ - Switch to the `argocd` namespace: ```bash kubectl config set-context --current --namespace argocd ``` - Check that we can communicate with the ArgoCD API now: ```bash argocd version ``` ] - Let's have a look at ArgoCD architecture! .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- class: pic  .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## ArgoCD API Server The API server is a gRPC/REST server which exposes the API consumed by the Web UI, CLI, and CI/CD systems. It has the following responsibilities: - application management and status reporting - invoking of application operations (e.g. sync, rollback, user-defined actions) - repository and cluster credential management (stored as K8s secrets) - authentication and auth delegation to external identity providers - RBAC enforcement - listener/forwarder for Git webhook events .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## ArgoCD Repository Server The repository server is an internal service which maintains a local cache of the Git repositories holding the application manifests. It is responsible for generating and returning the Kubernetes manifests when provided the following inputs: - repository URL - revision (commit, tag, branch) - application path - template specific settings: parameters, helm values... 
.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## ArgoCD Application Controller The application controller is a Kubernetes controller which continuously monitors running applications and compares the current, live state against the desired target state (as specified in the repo). It detects *OutOfSync* application state and optionally takes corrective action. It is responsible for invoking any user-defined hooks for lifecycle events (*PreSync, Sync, PostSync*). .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Preparing a repository for ArgoCD - We need a repository with Kubernetes YAML manifests - You can fork [kubercoins] or create a new, empty repository - If you create a new, empty repository, add some manifests to it .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Add an Application - An Application can be added to ArgoCD via the web UI or the CLI (either way, this will create a custom resource of `kind: Application`) - The Application should then automatically be deployed to our cluster (the application manifests will be "applied" to the cluster) .lab[ - Let's use the CLI to add an Application: ```bash argocd app create kubercoins \ --repo https://github.com/`<your_user>/<your_repo>`.git \ --path . --revision `<branch>` \ --dest-server https://kubernetes.default.svc \ --dest-namespace kubercoins-prod ``` ] .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Checking progress - We can see sync status in the web UI or with the CLI .lab[ - Let's check app status with the CLI: ```bash argocd app list ``` - We can also check directly with the Kubernetes CLI: ```bash kubectl get applications ``` ] - The app is there and it is `OutOfSync`! 
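For reference, the Application created earlier with `argocd app create` should correspond roughly to the manifest below. This is a sketch, not the exact object that the CLI writes: the `<your_user>`, `<your_repo>` and `<branch>` placeholders are the same as in the CLI command, while `project: default` and the `argocd` namespace are assumptions matching a default install.

```yaml
# Sketch of the Application behind "argocd app create kubercoins ..."
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: kubercoins
  namespace: argocd              # assumption: ArgoCD installed in "argocd"
spec:
  project: default               # assumption: the built-in default project
  source:
    repoURL: https://github.com/<your_user>/<your_repo>.git
    path: .
    targetRevision: <branch>
  destination:
    server: https://kubernetes.default.svc
    namespace: kubercoins-prod
```

Running `kubectl get applications kubercoins --namespace argocd -o yaml` shows what the CLI actually created, which is a good way to compare.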
.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Manual sync with the CLI - By default the "sync policy" is `manual` - It can also be set to `auto`, which would check the git repository every 3 minutes (this interval can be [configured globally][pollinginterval]) - Manual sync can be triggered with the CLI .lab[ - Let's force an immediate sync of our app: ```bash argocd app sync kubercoins ``` ] 🤔 We're getting errors! .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Sync failed We should receive a failure: `FATA[0000] Operation has completed with phase: Failed` And in the output, we see more details: `Message: one or more objects failed to apply,` <br/> `reason: namespaces "kubercoins-prod" not found` .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Creating the namespace - There are multiple ways to achieve that - We could generate a YAML manifest for the namespace and add it to the git repository - Or we could use "Sync Options" so that ArgoCD creates it automatically! - ArgoCD provides many "Sync Options" to handle various edge cases - Some [others](https://argo-cd.readthedocs.io/en/stable/user-guide/sync-options/) are: `FailOnSharedResource`, `PruneLast`, `PrunePropagationPolicy`... 
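For the first approach, a minimal Namespace manifest committed next to the other manifests might look like this (the file name is up to us):

```yaml
# Namespace expected by the "kubercoins" Application (--dest-namespace)
apiVersion: v1
kind: Namespace
metadata:
  name: kubercoins-prod
```

The drawback is that the namespace name is then hard-coded in the repository, which is less convenient when the same manifests get deployed to several namespaces.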
.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Editing the app's sync options - This can be done through the web UI or the CLI .lab[ - Let's use the CLI once again: ```bash argocd app edit kubercoins ``` - Add the following to the YAML manifest, at the root level: ```yaml syncPolicy: syncOptions: - CreateNamespace=true ``` ] .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Sync again .lab[ - Let's retry the sync operation: ```bash argocd app sync kubercoins ``` - And check the application status: ```bash argocd app list kubectl get applications ``` ] - It should show `Synced` and `Progressing` - After a while (when all pods are running correctly) it should be `Healthy` .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Managing Applications via the Web UI - ArgoCD is popular in large part due to its browser-based UI - Let's see how to manage Applications in the web UI .lab[ - Expose the web dashboard on a local port: ```bash argocd admin dashboard ``` - This command will show the dashboard URL; open it in a browser - Authentication should be automatic ] Note: `argocd admin dashboard` is similar to `kubectl port-forward` or `kubectl proxy`. (The dashboard remains available as long as `argocd admin dashboard` is running.) .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Adding a staging Application - Let's add another Application for a staging environment - First, create a new branch (e.g. `staging`) in our kubercoins fork - Then, in the ArgoCD web UI, click on the "+ NEW APP" button (on a narrow display, it might just be "+", right next to buttons looking like 🔄 and ↩️) - See next slides for details about that form! 
.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Defining the Application | Field | Value | |------------------|--------------------------------------------| | Application Name | `kubercoins-stg` | | Project Name | `default` | | Sync policy | `Manual` | | Sync options | check `auto-create namespace` | | Repository URL | `https://github.com/<username>/<reponame>` | | Revision | `<branchname>` | | Path | `.` | | Cluster URL | `https://kubernetes.default.svc` | | Namespace | `kubercoins-stg` | Then click on the "CREATE" button (top left). .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Synchronizing the Application - After creating the app, it should now show up in the app tiles (with a yellow outline to indicate that it's out of sync) - Click on the "SYNC" button on the app tile to show the sync panel - In the sync panel, click on "SYNCHRONIZE" - The app will start to synchronize, and should become healthy after a little while .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Making changes - Let's make changes to our application manifests and see what happens .lab[ - Make a change to a manifest (for instance, change the number of replicas of a Deployment) - Commit that change and push it to the staging branch - Check the application sync status: ```bash argocd app list ``` ] - After a short period of time (a few minutes max) the app should show up "out of sync" .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Automated synchronization - We don't want to manually sync after every change (that wouldn't be true continuous deployment!) - We're going to enable "auto sync" - Note that this requires much more rigorous testing and observability! 
(we need to be sure that our changes won't crash our app or even our cluster) - The Argo project also provides [Argo Rollouts][rollouts] (a controller and CRDs to provide blue-green, canary deployments...) - Today we'll just turn on automated sync for the staging namespace .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Enabling auto-sync - In the web UI, go to *Applications* and click on *kubercoins-stg* - Click on the "DETAILS" button (top left, might be just an "i" sign on narrow displays) - Click on "ENABLE AUTO-SYNC" (under "SYNC POLICY") - After a few minutes the changes should show up! .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Rolling back - If we deploy a broken version, how do we recover? - "The GitOps way": revert the changes in source control (see next slide) - Emergency rollback: - disable auto-sync (if it was enabled) - on the app page, click on "HISTORY AND ROLLBACK" <br/> (with the clock-with-backward-arrow icon) - click on the "..." button next to the version we want to roll back to - click "Rollback" and confirm .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Rolling back with GitOps - The correct way to roll back is rolling back the code in source control ```bash git checkout staging git revert HEAD git push origin staging ``` .debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)] --- ## Working with Helm - ArgoCD supports different tools to process Kubernetes manifests: Kustomize, Helm, Jsonnet, and [Config Management Plugins][cmp] - Let's see how to deploy Helm charts with ArgoCD! 
- In the [kubercoins] repository, there is a branch called [helm-branch]

- It provides a generic Helm chart, in the [generic-service] directory

- There are service-specific values YAML files in the [values] directory

- Let's create one application for each of the 5 components of our app!

.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)]

---

## Creating a Helm Application

- The example below uses "upstream" kubercoins

- Feel free to use your own fork instead!

.lab[

- Create an Application for `hasher`:
  ```bash
    argocd app create hasher \
         --repo https://github.com/jpetazzo/kubercoins.git \
         --path generic-service --revision helm \
         --dest-server https://kubernetes.default.svc \
         --dest-namespace kubercoins-helm \
         --sync-option CreateNamespace=true \
         --values ../values/hasher.yaml \
         --sync-policy=auto
  ```

]

.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)]

---

## Deploying the rest of the application

- Option 1: repeat the previous command (updating app name and values)

- Option 2: author YAML manifests and apply them

.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)]

---

## Additional considerations

- When running in production, ArgoCD can be integrated with an [SSO provider][sso]

  - ArgoCD embeds and bundles [Dex] to delegate authentication

  - it can also use an existing OIDC provider (Okta, Keycloak...)

- A single ArgoCD instance can manage multiple clusters

  (but it's also fine to have one ArgoCD per cluster)

- ArgoCD can be complemented with [Argo Rollouts][rollouts] for advanced rollout control

  (blue/green, canary...)
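
---

## Deploying the rest of the application: a sketch

Option 2 from "Deploying the rest of the application" can be sketched as a small shell script that writes one ArgoCD `Application` manifest per component, mirroring the flags of the `argocd app create hasher` command. This is only a sketch under assumptions that the slides do not state: the five components are taken to be `hasher`, `redis`, `rng`, `webui`, and `worker`, each is assumed to have a matching file in the `values` directory of the `helm` branch, and ArgoCD is assumed to run in the `argocd` namespace. The `gen_app` function and the `apps` output directory are hypothetical names chosen for illustration.

```bash
set -eu

# Assumption: these are the five component names, each with a values/<name>.yaml file.
COMPONENTS="hasher redis rng webui worker"
REPO="https://github.com/jpetazzo/kubercoins.git"
# Hypothetical output directory for the generated manifests.
OUTDIR="${OUTDIR:-apps}"

# gen_app NAME: print an Application manifest equivalent to the
# `argocd app create` flags used for hasher.
gen_app() {
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: $1
  namespace: argocd   # assumption: the namespace where ArgoCD is installed
spec:
  project: default
  source:
    repoURL: $REPO
    targetRevision: helm
    path: generic-service
    helm:
      valueFiles:
        - ../values/$1.yaml
  destination:
    server: https://kubernetes.default.svc
    namespace: kubercoins-helm
  syncPolicy:
    automated: {}
    syncOptions:
      - CreateNamespace=true
EOF
}

# Write one manifest file per component.
mkdir -p "$OUTDIR"
for name in $COMPONENTS; do
  gen_app "$name" > "$OUTDIR/$name.yaml"
done
```

The generated files could then be reviewed and submitted with `kubectl apply -f apps/`, provided the current context points at the cluster where ArgoCD runs. Keeping these manifests in a Git repository would also let ArgoCD itself manage them.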
.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)]

---

## Acknowledgements

Many thanks to
Anton (Ant) Weiss ([antweiss.com](https://antweiss.com), [@antweiss](https://twitter.com/antweiss))
and
Guilhem Lettron
for contributing an initial version and suggestions to this ArgoCD chapter.

All remaining typos, mistakes, or approximations are mine (Jérôme Petazzoni).

[argocd-binaries]: https://github.com/argoproj/argo-cd/releases/latest
[argocd-helmchart]: https://artifacthub.io/packages/helm/argo/argocd-apps
[argocd-packages]: https://argo-cd.readthedocs.io/en/stable/cli_installation/
[cmp]: https://argo-cd.readthedocs.io/en/stable/operator-manual/config-management-plugins/
[Dex]: https://github.com/dexidp/dex
[generic-service]: https://github.com/jpetazzo/kubercoins/tree/helm/generic-service
[helm-branch]: https://github.com/jpetazzo/kubercoins/tree/helm
[issue14167]: https://github.com/argoproj/argo-cd/issues/14167
[kubercoins]: https://github.com/jpetazzo/kubercoins
[pollinginterval]: https://argo-cd.readthedocs.io/en/stable/faq/#how-often-does-argo-cd-check-for-changes-to-my-git-or-helm-repository
[rollouts]: https://argoproj.github.io/rollouts/
[sso]: https://argo-cd.readthedocs.io/en/stable/operator-manual/user-management/#sso
[values]: https://github.com/jpetazzo/kubercoins/tree/helm/values

???

:EN:- Implementing gitops with ArgoCD
:FR:- Workflow gitops avec ArgoCD

.debug[[k8s/argocd.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/k8s/argocd.md)]

---

class: title

Thank you!

.debug[[shared/thankyou.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/thankyou.md)]

---

## Final words...

- The training portal stays online after the training

- Feel free to contact us via instant messaging!

- The ENIX VMs stay online for at least one week after the training

  (but not the cloud clusters; we shut those down very quickly)

- Don't forget to fill out the evaluation forms

  (it's not for us, it's a legal requirement 😅)

- Once again, **thank you**!

.debug[[shared/thankyou.md](https://github.com/jpetazzo/container.training/tree/2025-01-enix/slides/shared/thankyou.md)]